CovTrace – an application for monitoring and counteracting the Covid-19 pandemic

CovTrace is intended to be an application for monitoring and counteracting the Covid-19 pandemic.

Initially, the platform was to consist of three main components:

- Mobile application (for Android and iOS) for users, available to every citizen with a smartphone running one of these operating systems.

- Management panel: a web application for managing infection cases, reporting new cases, and monitoring them.

- Data processing module: matching data from the phone with the data on identified confirmed cases of infection.

This study presents the results of work on a proof of concept (PoC) for the data processing module.

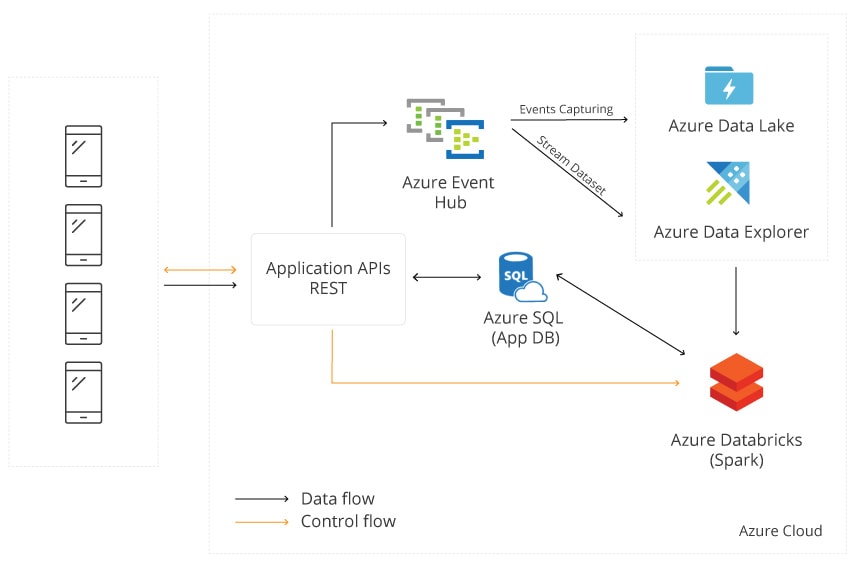

The diagram above shows the overall architecture of the system, with a particular focus on the data processing module.

The data are sent from the mobile application to a dedicated endpoint (REST API), which writes telemetry data to Azure Event Hub, which acts as a buffer. Data from Azure Event Hub:

- are saved in Azure Data Lake Storage (option 1),

- are saved in Azure Data Explorer (option 2).

The data processing itself (matching phone data with the data on identified confirmed infection cases from App Db) takes place on Spark (option 1) or directly in Azure Data Explorer (option 2). The PoC partially implements both options.

Data Simulator

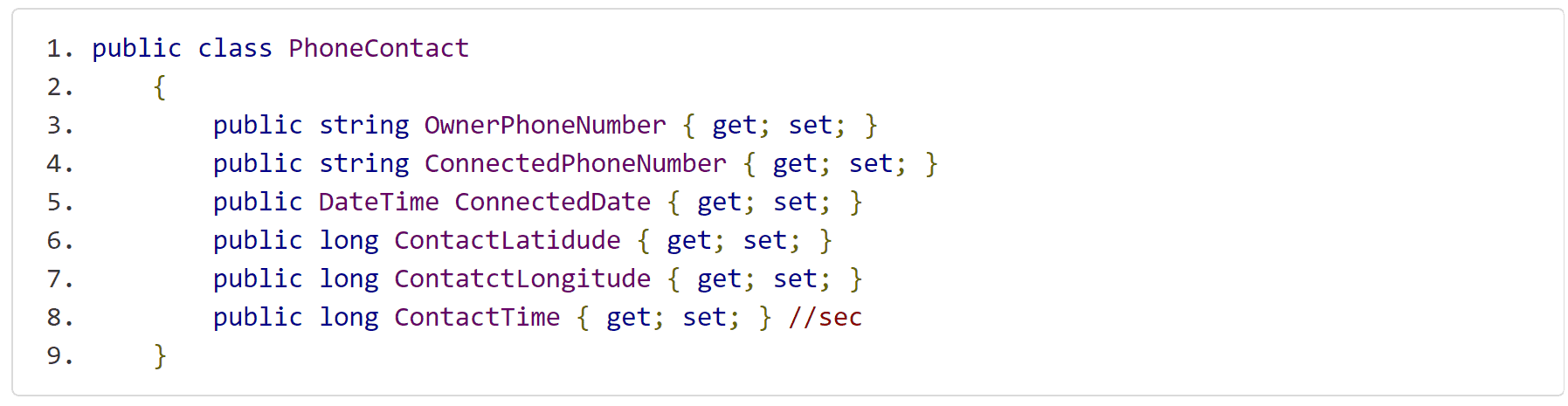

Within PoC, a data simulator is used (similar to this one), which sends the data directly to Azure Event Hub.
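The simulator itself is not shown in this article. As a rough illustration of what such a tool might generate, here is a minimal Python sketch. The field names (`phoneId`, `contactPhoneId`, `timestamp`) and the 30-second spacing are assumptions made for this example, not the actual CovTrace message schema, and the sketch only builds JSON payloads without sending them to Azure Event Hub.

```python
import json
import random
from datetime import datetime, timedelta, timezone


def simulate_contacts(phone_count, event_count, start, seed=0):
    """Generate hypothetical contact events between simulated phones."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    phones = [f"Phone{i}" for i in range(1, phone_count + 1)]
    events = []
    for i in range(event_count):
        # Pick two distinct phones that "met" each other.
        source, target = rng.sample(phones, 2)
        events.append({
            "phoneId": source,          # assumed field name
            "contactPhoneId": target,   # assumed field name
            "timestamp": (start + timedelta(seconds=30 * i)).isoformat(),
        })
    return events


events = simulate_contacts(
    phone_count=100,
    event_count=5,
    start=datetime(2020, 4, 1, tzinfo=timezone.utc),
)
# Each event would become the body of one Event Hub message.
payloads = [json.dumps(event) for event in events]
```

In a real simulator, each payload would be handed to an Event Hub client instead of being kept in a list.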

Saving Data to Azure Data Lake Storage

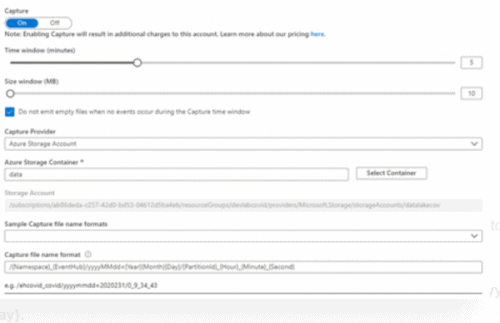

Azure Data Lake Storage Gen2 was chosen as the data storage for option 1. Data from Azure Event Hub are recorded with the Azure Event Hub Capture mechanism, which saves data from Event Hub to the storage in Avro format.

Pay particular attention to the “Capture file name format” setting. It is important to choose a data partitioning method that lets you process the data efficiently in Spark. For the PoC, Event Hub is configured as follows:

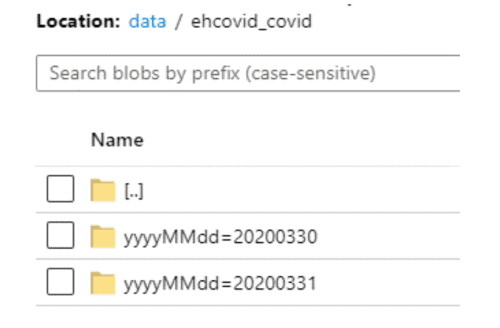

In this example, the data are partitioned by date, using the key yyyyMMdd with the value {Year}{Month}{Day}. The resulting capture file name format is:

/{Namespace}{EventHub}/yyyyMMdd={Year}{Month}{Day}/{PartitionId}{Hour}{Minute}{Second}
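The key=value directory name matters because Spark can treat such directories as partitions and read only the days it needs. The following Python sketch shows which partition directory names a given date range corresponds to. The function name `partition_dirs` is made up for this illustration, and the sketch covers only the date part of the path.

```python
from datetime import date, timedelta


def partition_dirs(first_day, last_day):
    """Return the date-partition directory names for an inclusive day range."""
    days = (last_day - first_day).days
    return [
        # Mirrors the yyyyMMdd={Year}{Month}{Day} part of the capture format.
        f"yyyyMMdd={first_day + timedelta(days=offset):%Y%m%d}"
        for offset in range(days + 1)
    ]


dirs = partition_dirs(date(2020, 3, 30), date(2020, 4, 1))
# dirs == ["yyyyMMdd=20200330", "yyyyMMdd=20200331", "yyyyMMdd=20200401"]
```

A processing job limited to these three days would only need to touch the files under these three directories.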

The advantage of this solution is its simplicity (no additional code is needed to save data). The disadvantage is the additional cost of the Event Hub Capture mechanism.

Saving Data to Azure Data Explorer

Azure Data Explorer is a scalable service for data exploration (especially of telemetry data).

Data loading is based on the Data Ingestion mechanism: a built-in function of Azure Data Explorer for automatically loading data from sources such as Azure Event Hub.

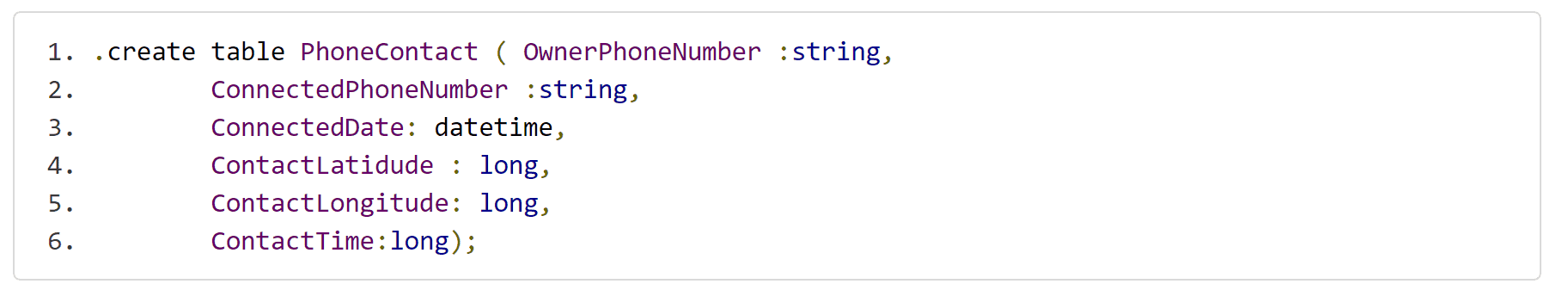

Once a cluster and database have been created, a table is created for storing data:

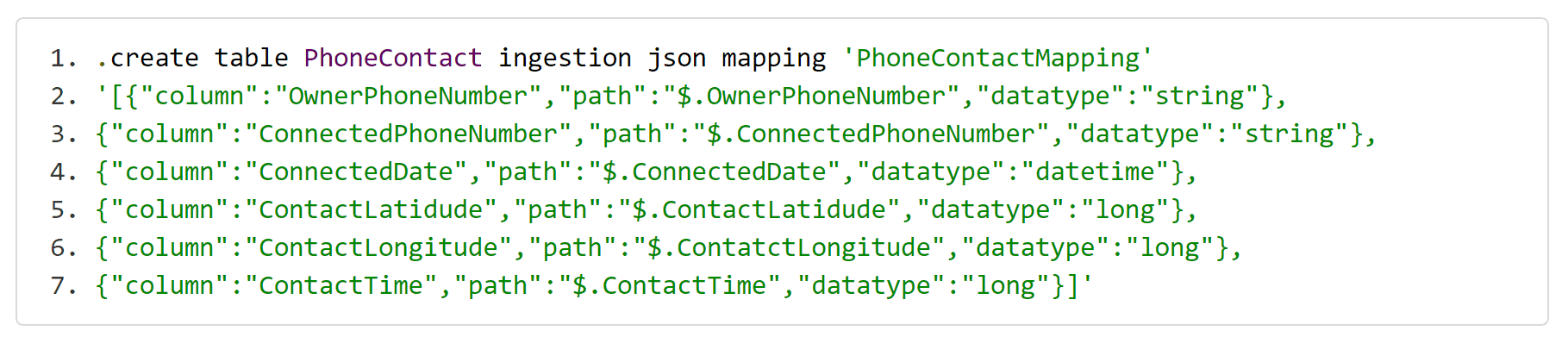

and mapping of message data to the rows in the table:

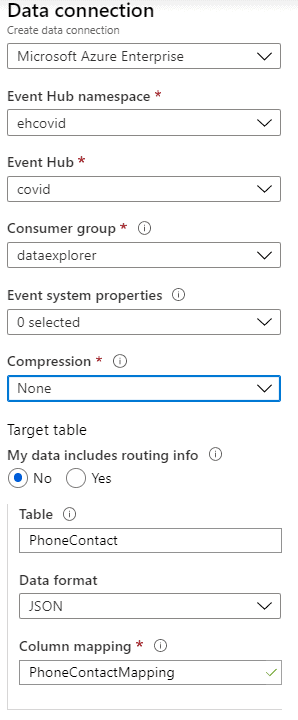

After preparing the table and mapping, the loading configuration boils down to creating Data Ingestion:

It is important to create a dedicated consumer group in Azure Event Hub in advance. The delay when loading data from Azure Event Hub is approximately five minutes. Loaded data (query in KQL):

Again, the advantage of this solution is its simplicity (no additional code is needed to save data), but the disadvantage is the additional cost of maintaining an Azure Data Explorer cluster.

Data Processing in Azure Data Explorer

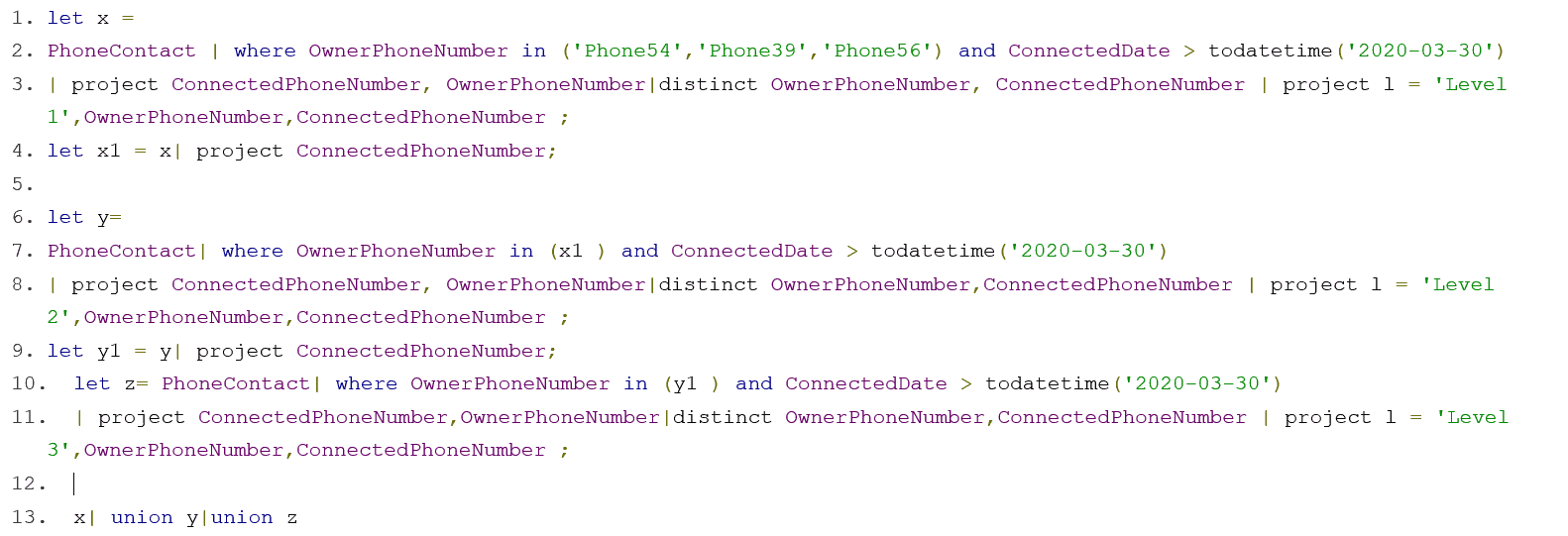

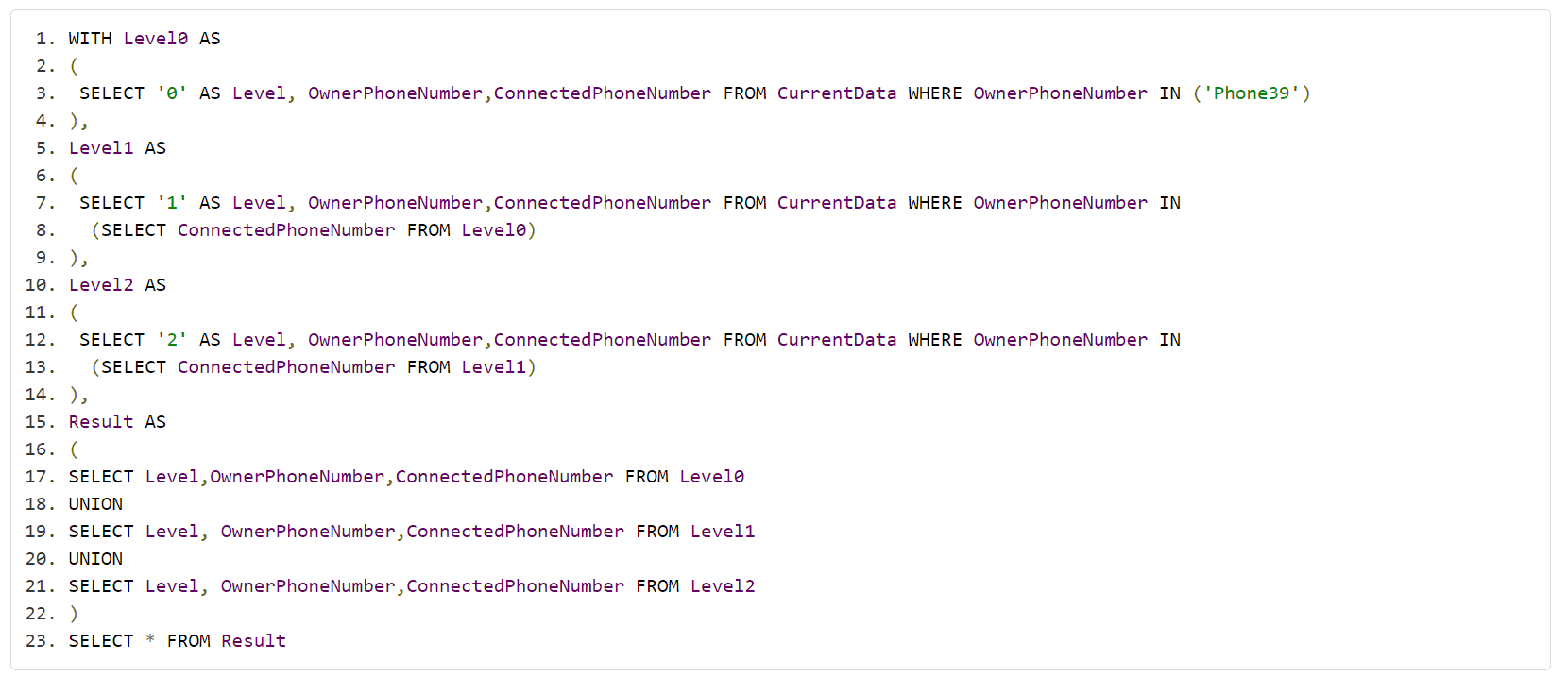

In the case of Azure Data Explorer, data processing, including matching data from phones with the data about identified confirmed cases of infection, can be carried out with a query in KQL. An example of such a query is shown below:

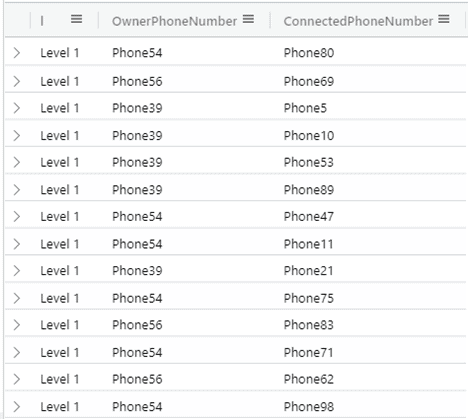

The query above returns information about contact with the people who own the phones 'Phone54', 'Phone39' and 'Phone56' at the following levels:

- Level 1 – direct contact

- Level 2 – indirect contact through people who had direct contact

- Level 3 – indirect contact through people who had Level 2 indirect contact

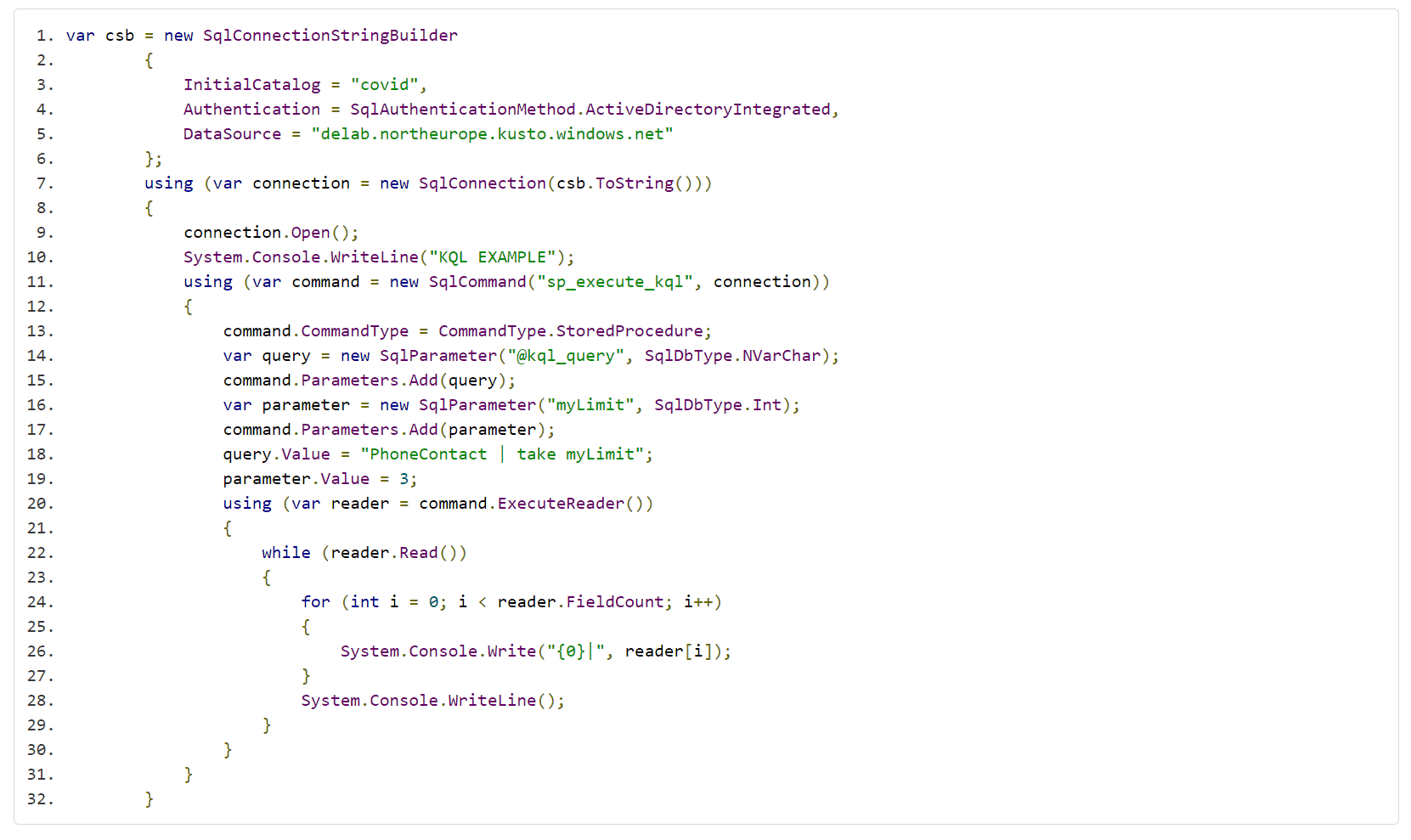
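The KQL query is only available as an image, so it is not reproduced here. The idea of contact levels can still be illustrated with a small Python sketch that runs a breadth-first search over contact pairs. This is not the author's query: the function name, the input format (pairs of phone IDs) and the assumption that contacts are symmetric and not limited by time are all choices made for this example.

```python
from collections import defaultdict, deque


def contact_levels(contacts, infected, max_level=3):
    """Map each exposed phone to its contact level (1 = direct contact)."""
    neighbours = defaultdict(set)
    for a, b in contacts:  # contacts are treated as symmetric
        neighbours[a].add(b)
        neighbours[b].add(a)

    levels = {phone: 0 for phone in infected}  # level 0 = confirmed case
    queue = deque(infected)
    while queue:
        phone = queue.popleft()
        if levels[phone] == max_level:
            continue  # do not expand beyond the last level
        for other in neighbours[phone]:
            if other not in levels:
                levels[other] = levels[phone] + 1
                queue.append(other)
    # Report only exposed phones, not the confirmed cases themselves.
    return {phone: level for phone, level in levels.items() if level > 0}


contacts = [
    ("Phone54", "Phone1"), ("Phone1", "Phone2"), ("Phone39", "Phone2"),
    ("Phone2", "Phone3"), ("Phone3", "Phone4"), ("Phone4", "Phone5"),
]
result = contact_levels(contacts, ["Phone54", "Phone39", "Phone56"])
```

Because the search proceeds level by level, each phone is assigned the lowest level at which it can be reached from any confirmed case.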

The Data Explorer cluster can be accessed with the SQL driver, and the KQL code is executed via the sp_execute_kql procedure. As a result, such a query can be run directly from the app once the data about infections have been loaded.
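As a rough sketch of what the application would send through the SQL driver, the Python snippet below wraps a KQL query in a T-SQL call to sp_execute_kql. It assumes that the procedure takes the KQL text as its first argument. The table and column names in the sample query are hypothetical, and no connection is opened here.

```python
def build_sp_execute_kql(kql_query):
    """Wrap a KQL query in a T-SQL call to sp_execute_kql."""
    # Single quotes inside a T-SQL string literal are escaped by doubling them.
    escaped = kql_query.replace("'", "''")
    return f"EXEC sp_execute_kql N'{escaped}'"


# Hypothetical table and column names, used only for illustration.
kql = "PhoneContacts | where phoneId in ('Phone54', 'Phone39', 'Phone56') | take 10"
statement = build_sp_execute_kql(kql)
```

With a real driver, passing the query text as a bound parameter would likely be safer than escaping it by hand.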

Processing the Data Stored in Azure Data Lake Storage

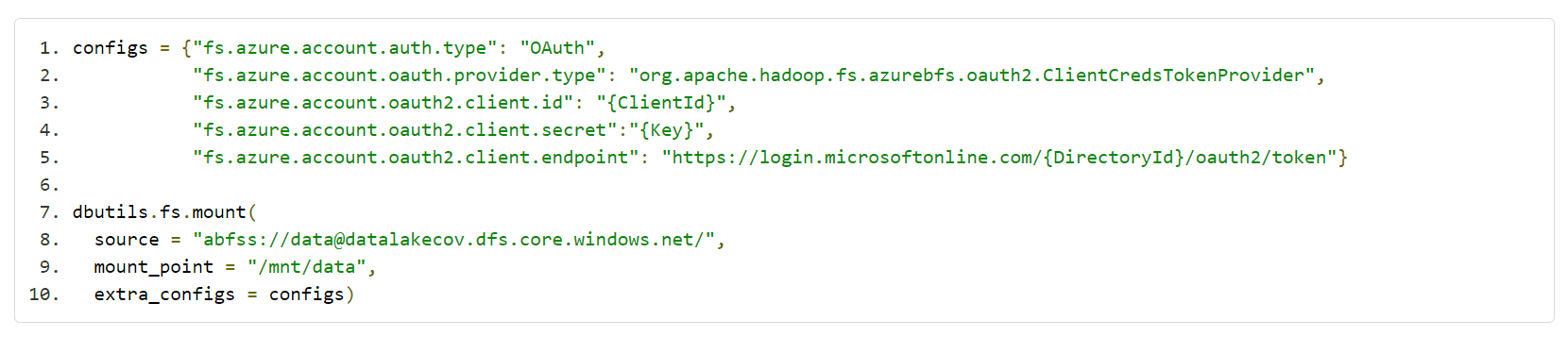

The data stored as files in Azure Data Lake Storage are processed with Spark (Azure Databricks), which is run on demand, e.g. after retrieving data about infections. To achieve this, several operations must be performed:

- create a dedicated Service Principal and grant it access to Azure Data Lake Storage (and the data container),

- mount Azure Data Lake so that it is available in Azure Databricks – you can use the following script (one-time operation),

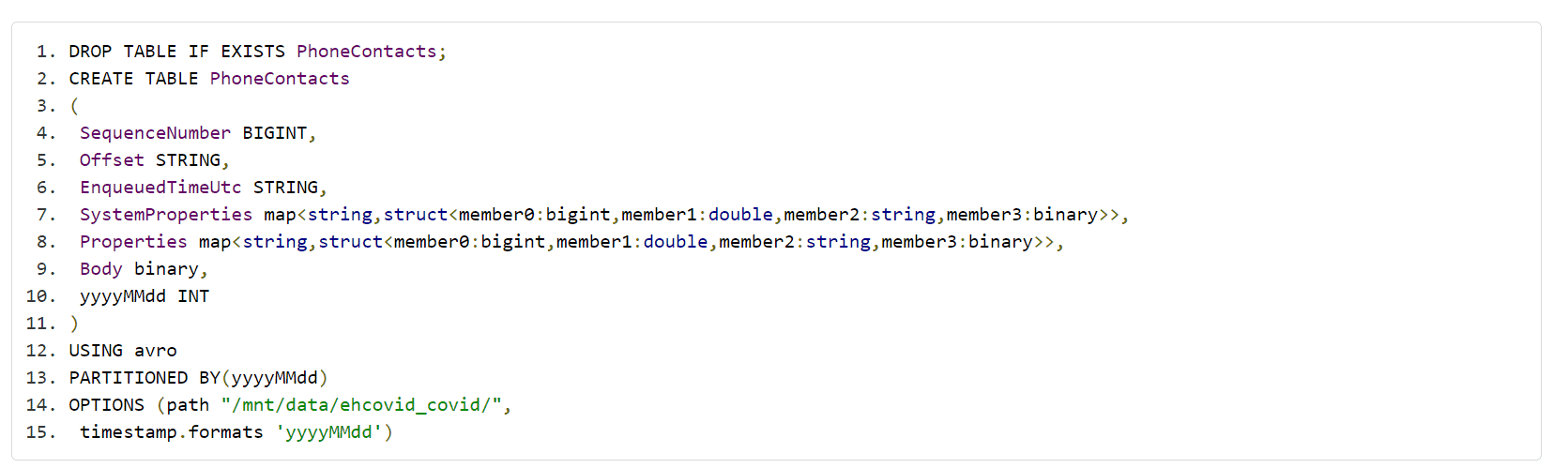

- map telemetry data to tables (Avro format),

- create a notebook for processing – it should refresh information on partitions

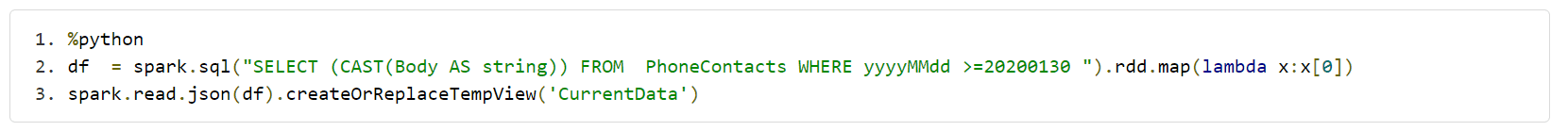

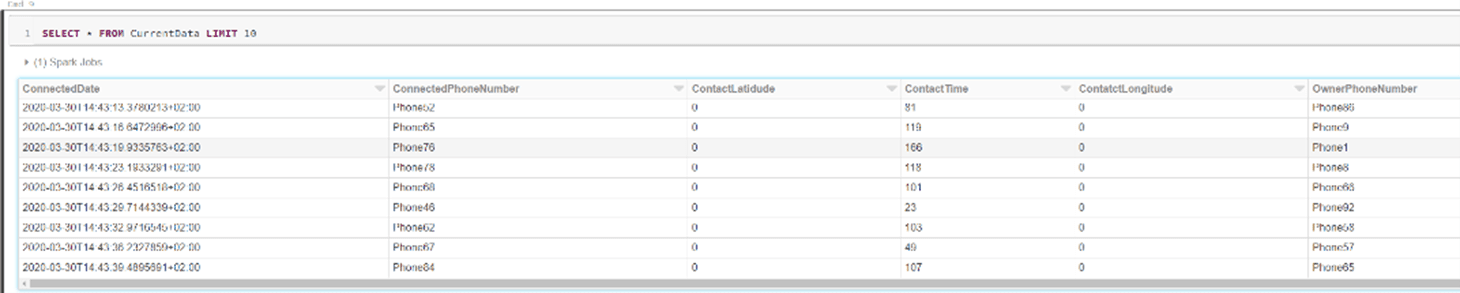

MSCK REPAIR TABLE PhoneContacts

- extract telemetry data from the Avro format (Body column in the PhoneContacts table) for a given time interval (the data are partitioned by yyyyMMdd),

- save the results, e.g. to an SQL database,

- create a job to run on request (using the previously created notebook),

- generate an access token,

- run the on-request job (creating a Spark cluster on demand) with the REST API.

POST: https://northeurope.azuredatabricks.net/api/2.0/jobs/run-now

Authentication Token: {Token}

Body: ID of the created job
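The call above can be sketched in Python with the standard library. The snippet assumes that the token is sent as a bearer token in the Authorization header and that the body is a JSON object with a `job_id` field. The job ID and token shown are placeholders, and the request is only built, not sent.

```python
import json
import urllib.request

# URL taken from the text above; the job ID and token are placeholders.
RUN_NOW_URL = "https://northeurope.azuredatabricks.net/api/2.0/jobs/run-now"


def build_run_now_request(job_id, token):
    """Build (but do not send) the request that triggers a job run."""
    body = json.dumps({"job_id": job_id}).encode("utf-8")
    return urllib.request.Request(
        RUN_NOW_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_run_now_request(job_id=42, token="<access-token>")
# urllib.request.urlopen(request) would submit the run; it is not called here.
```

Keeping request construction separate from sending makes it easy to inspect the call before pointing it at a real workspace.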

Summary

The solutions presented here are only demonstrations, but they confirm that a project based on this architecture is feasible. Both approaches, based either on Azure Data Explorer or on Azure Databricks, allow you to create a scalable solution that can process hundreds of terabytes of data. Currently, the solution based on Spark seems more attractive in terms of cost; however, it is more complex.

As for the PoC itself, implementing it took about four hours, plus an additional two or three hours to prepare the simulator.

Here you can find the link to the architecture of a similar solution created by Cloudera.