Does Docker make sense in 2021?

In early December 2020, the IT world was hit by the news that “Kubernetes 1.20 deprecated Docker”. So far, this means that Kubernetes is issuing a deprecation warning. Specifically, this refers to dockershim – I’ll elaborate on that later in the text. Docker support will be removed in version 1.22, which is planned for the second half of 2021. That’s why I think that the year 2021 is the beginning of Docker’s end.

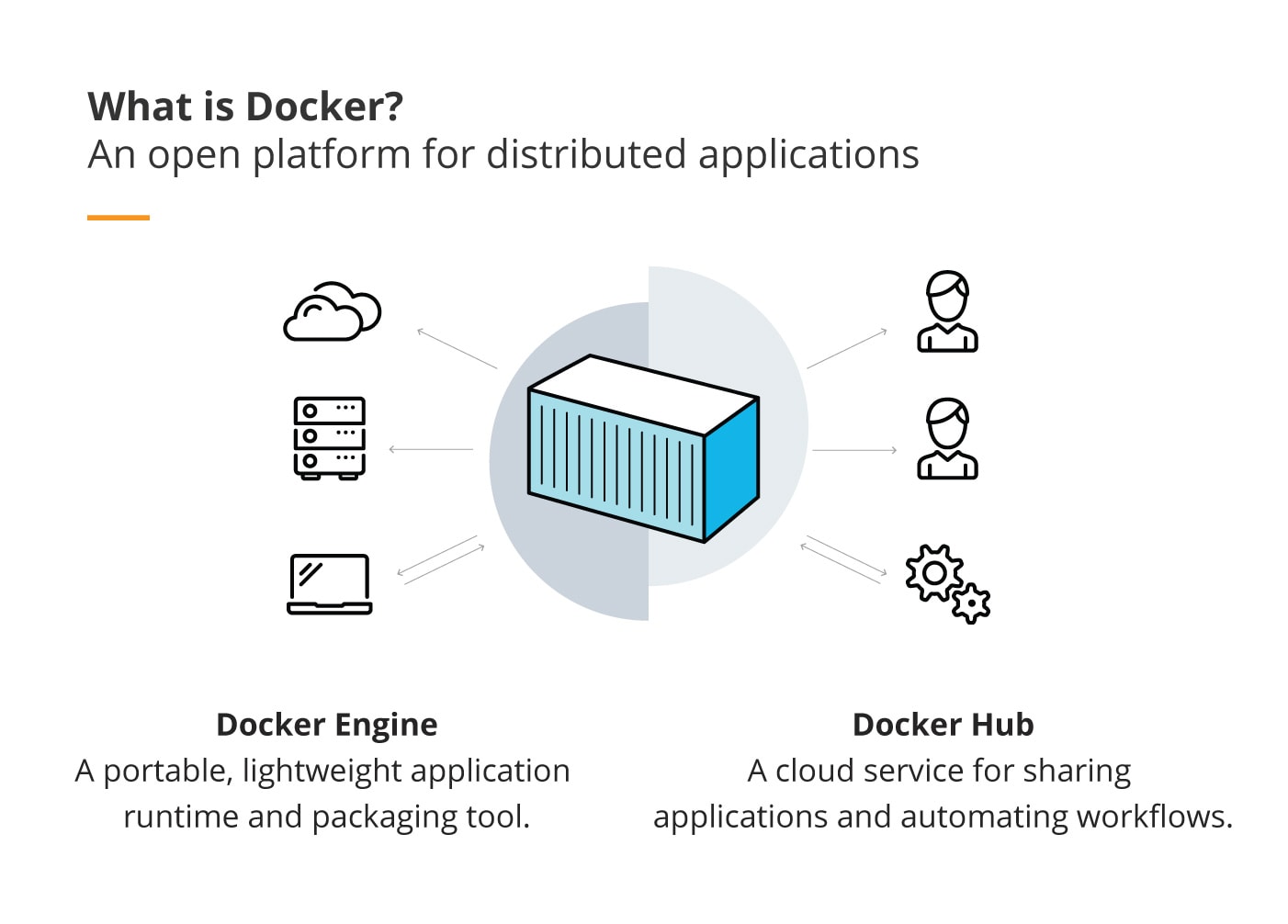

What are Docker and Kubernetes?

Docker packages an application together with its dependencies into a container image. This allows using the same package on the developer’s laptop, in the test environment, and in production. So much for theory.

Kubernetes is a system that orchestrates containers across a cluster. It is also responsible for the lifecycle of the containers and for joining them into Pods. Compared to Docker, it operates at a higher level, as it controls many containers on many machines.

If the Docker container is an equivalent of a virtual machine, Kubernetes is like a hosting or cloud provider. Docker (or Docker Compose) helps run various processes, connect them in a network, and allocate storage within one computer. Kubernetes can do the same within a cluster composed of a number of computers.

Kubernetes brings Docker down to the level of a component which runs the containers. Thanks to the introduction of the CRI standard (Container Runtime Interface), these components can be changed. At present, only containerd and cri-o are compatible with the CRI. Docker requires the dockershim adapter, which is precisely what the programmers supporting Kubernetes want to get rid of.

Why does Docker matter?

In the past few years, Docker has transformed from dotCloud’s side project into a billion-dollar business. Despite the 280 million USD of venture capital funding, Docker, Inc. didn’t do well as an enterprise, and its Docker Enterprise business was subsequently bought by Mirantis. The purchase amount was not published, which is quite curious. I guess they got a bargain.

Mirantis’s flagship product is Kubernetes-as-a-service – and so, they compete with VMware and, obviously, with the cloud providers. Kubernetes is so important for the company that they wanted to maintain Docker Swarm for two years only, but they quickly backed out of that, surely pressured to do so by their current clients. Actually, I know a company that has a large Docker Swarm installation, and migrating to another solution would be a difficult affair for them.

What is Docker Swarm?

Docker Swarm is a bit like a Kubernetes that is as easy to use as regular Docker. Of course, there’s more to it – nodes, replicas, grids – but still, the cluster view is really simplified in comparison with Kubernetes.

Does that mean that Mirantis bought a product that competes with their flagship one? Yeah, in a way. Docker Swarm has already got over its teething problems (e.g. the bug of assigning duplicated IP addresses), so it looks like a reliable product for small teams. The problem is that small teams and small clusters make small money.

Besides, Docker Swarm is simply too simple. In our team, it took a single person to create and manage Docker Swarm clusters. Apart from the bugs that I’ve mentioned, there isn’t much work involved. Large updates arrive along with Docker, which doesn’t happen too often, so that’s another problem gone.

What is the agenda of Mirantis (owner of Docker Enterprise)?

The question is: if Mirantis makes money on Kubernetes-as-a-service for enterprise clients, and Kubernetes removes Docker support, what is that all about? From my point of view, a company that profits from Kubernetes has no reason to invest in Docker once it is no longer supported by Kubernetes.

Mirantis takes care of the current enterprise customers

Later in December, Mirantis announced that they were going to keep supporting dockershim (Docker’s adapter to the CRI) together with Docker, Inc. They explained that their current customers used more complex Kubernetes installations, which depended on specific Docker Engine features. What does that change? The situation resembles the one with Docker Swarm. Mirantis’s technical debt will rise (more on that below).

These are only my private musings. I haven’t seen Mirantis’s contract with Docker, Inc., or their strategy. I rely on official press releases and my observation of the market. Trying to imagine what a big company might do based on your own experience is an interesting mental exercise, which allows you to look from a distance at corporations that produce the technologies you use. I do recommend that.

What does that mean?

Over the years, Docker Engine has grown and evolved into a modular architecture. Various implementations of components like logging have emerged. This gave room for standardising and simplifying the application architecture. It was enough for an app to log to the Linux streams stdout and stderr, and Docker collected and stored the logs locally. It also provided an interface for reading those logs in the form of the docker logs command.
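To make the "log to stdout and stderr" convention concrete, here is a minimal sketch of an app-side logger. The function name `build_logger` and the split by log level (warnings and errors to stderr, everything else to stdout) are my own assumptions for illustration, not something Docker requires; Docker only captures whatever the process writes to the two streams.

```python
import logging
import sys


def build_logger(name: str = "app") -> logging.Logger:
    """Return a logger that writes below-WARNING records to stdout and the rest to stderr.

    Hypothetical helper: no files, no rotation, the container runtime collects the streams.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.handlers.clear()   # avoid duplicate handlers when called more than once
    logger.propagate = False  # keep records away from the root logger

    formatter = logging.Formatter("%(levelname)s %(name)s %(message)s")

    # Informational output goes to stdout.
    out = logging.StreamHandler(sys.stdout)
    out.setLevel(logging.DEBUG)
    out.addFilter(lambda record: record.levelno < logging.WARNING)
    out.setFormatter(formatter)

    # Warnings and errors go to stderr.
    err = logging.StreamHandler(sys.stderr)
    err.setLevel(logging.WARNING)
    err.setFormatter(formatter)

    logger.addHandler(out)
    logger.addHandler(err)
    return logger


if __name__ == "__main__":
    log = build_logger()
    log.info("service started")
    log.error("could not reach database")
```

An app written this way needs no knowledge of where the logs end up, which is exactly what makes a single viewer for Java, Node, or PHP apps possible.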

For developers, it’s very convenient to have a single tool to view app logs written in Java, Node, PHP, or other languages. IT Ops, who maintain the systems, find other things important as well: the guarantee that the logs won’t get lost, their retention, the speed with which they fill up disk space. This is a completely different set of problems, and Docker cannot solve them.

A single machine vs. a cluster

What works perfectly for a single machine might not be so great in a cluster. A good example here is docker service logs, which is the equivalent of the log viewer for Docker Swarm (the cluster orchestrating system for Docker). Unfortunately, in this case, the logs are not displayed in chronological order, which might be caused by the time differences between the particular machines in the cluster.

The use of NTP may reduce this problem to a certain extent, but that’s not the best solution. In the case of log order, it’s better to use a central aggregator that can add a timestamp at the moment of receiving the log. This is a solution of a different level, although normally necessary in distributed systems such as clusters.
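The idea of stamping logs on receipt can be sketched as follows. This is an in-memory toy under my own assumptions, not how any particular aggregator works: the names `LogAggregator` and `ReceivedLog` are hypothetical, and a real aggregator would receive lines over the network and persist them.

```python
import itertools
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(order=True)
class ReceivedLog:
    received_at: float                    # stamped by the aggregator, not by the node
    sequence: int                         # tie-breaker for lines arriving at the same instant
    node: str = field(compare=False)
    message: str = field(compare=False)


class LogAggregator:
    """Hypothetical central collector that ignores the clocks of the sending nodes."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock               # one clock for the whole cluster
        self._counter = itertools.count()
        self._entries: List[ReceivedLog] = []

    def receive(self, node: str, message: str) -> ReceivedLog:
        entry = ReceivedLog(self._clock(), next(self._counter), node, message)
        self._entries.append(entry)
        return entry

    def ordered(self) -> List[ReceivedLog]:
        # Sort by receipt time, then by arrival sequence.
        return sorted(self._entries)


if __name__ == "__main__":
    aggregator = LogAggregator()
    aggregator.receive("node-b", "request accepted")
    aggregator.receive("node-a", "request processed")
    for entry in aggregator.ordered():
        print(entry.received_at, entry.node, entry.message)
```

The trade-off is that the order reflects when lines arrived rather than when they were written, so network delays still matter; what disappears is the dependence on every machine having the same time.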

To explain Docker’s incompatibility, I will use the terminology of the Cynefin framework. The problem concerns a distributed system, which is intrinsically complex, while the solutions applied are designed for systems that are merely complicated.

In other words: the solutions chosen by Docker might work for a single machine, but they are not suitable for clusters, which contain a number of machines.

Programmers

For programmers, technically, little changes. We are still going to build Docker images, as they are compatible with the OCI standard (Open Container Initiative). This means that any CRI-compatible runtime will be able to run these images, be it locally or in a cluster.

In my opinion, the most important changes concern the way we think about containers. It’s time to abandon the analogy of a container as a “lightweight virtual machine” and take cloud-native applications into account. What’s crucial here is that one container is one process and that resources, such as files, are temporary.

Instead, it’s time to start perceiving a container as an instance of an application running in the cloud. We need to remember that there will be many copies of the app and that nobody knows on what machine a container will run. As a result, we cannot rely on local files, as the new instance of the container won’t have access to those saved by the previous one.

Another issue is the single process in a container. Managing the container lifetime, load balancing between the instances – these should be left to the orchestrating system (like Kubernetes). I’ve encountered a problem during a database migration, where some of the containers didn’t get the new address, because PM2 (a process manager for Node) ran in the container instead of Node itself, and restarting the container didn’t bring the desired effect.

If the target deployment environment is Kubernetes, I think it’s also a good idea to get interested in solutions that make it possible to comfortably run apps on a local Kubernetes cluster. I’m referring here to tools such as Skaffold (from Google), Draft (Microsoft), Tilt, or KubeVela.

Docker Compose works fine if the target environment is Docker Swarm, because they use the same YAML file format – but that’s a different story. This market is changing rapidly, and I’m going to find something in it for myself.

SREs / IT Ops

For SREs and IT Ops (the people who maintain the infrastructure), things get more complicated if Docker Engine is used in Kubernetes. It might be enough to use containerd as the CRI implementation. In this case, it all depends on how many Docker Engine dependencies have leaked into the infrastructure.

Docker-in-Docker (DinD) is a good example here. It is used to build images on CI (Continuous Integration) servers. As early as 2015, DinD for CI was considered a bad practice, but before that could become common knowledge, it turned out that DinD was a sort of quick win in a CI environment running in a cluster.

Of course, Mirantis will listen to any complaints concerning the dependence of a complicated CI system on Docker-in-Docker or other legacy solutions. After all, they declared they were going to support dockershim and they make money on that. I wonder, though, how much money it takes to get rid of such a problem.

I’m interested in this personally, because I’m working on a complex CI, which uses DinD to build Docker images. I realise that 2021 is a year of preparing the transition to a different CRI or dockershim. I’m afraid that the latter option will mean throwing yourself at the mercy of Mirantis, which may be a strategic risk. We’ll see.

Consultants

For consultants, this kind of transition is great news. After carefree years of using Docker in developer teams, it’s time for tidying up, teaching, and applying good practices. All with the objective of a smooth transition to more restrictive environments, like Kubernetes.

Personally, after six years of using Docker (including three years with Swarm), I’m learning new runtimes, orchestrating systems, and cluster management. These trends are now emerging within the scope of interest of corporations other than the tech giants.

On the other hand, companies such as Mirantis or VMware have a vital interest in implementing and supporting clusters, charging a lot for that. The same applies to the cloud providers AWS, Azure, and GCP, which offer hosted Kubernetes. Suffice it to say that the independent provider Linode has offered the Linode Kubernetes Engine (LKE) since 2019.

Summary

So, should you worry about the fact that Kubernetes deprecates Docker? You should… and you shouldn’t. I believe that the cloud business will grow and more and more companies will migrate to the cloud. In this environment, application containers and horizontal scaling (across multiple machines) are a natural direction. Perhaps we’ll just have to live with the complications resulting from the use of clusters.

If horizontal scaling is inevitable, Kubernetes will make a lot of things easier, even though it seems complicated in itself. In fact, it is extensive because the problem it solves (allocating resources in a cluster) is essentially complex. In this case, Kubernetes provides basic tools and terminology to deal with this complexity.

With time, solutions simplifying Kubernetes will surely turn up. All the cloud providers already offer a hosted Kubernetes cluster service, which relieves IT Ops of many operational tasks. Thanks to the fact that Kubernetes configuration takes the form of declarative YAML files, it will be possible to construct tools that will enable you to kind of click your way through the clusters. I’d even say that Kubernetes YAML will be to clusters what HTML is to the World Wide Web.
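Because the configuration is declarative data, a tool can generate it instead of a person typing it, which is what would make such "click-through" tools feasible. Below is a minimal sketch that builds a Deployment manifest as a plain dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The function name `deployment_manifest`, the app name `web`, and the image `example/web:1.0` are made up for illustration.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment description (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # how many copies of the app should run
            "selector": {"matchLabels": labels},  # must match the Pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Example values only; a GUI tool could fill these in from a form.
    print(json.dumps(deployment_manifest("web", "example/web:1.0", 3), indent=2))
```

The manifest states the desired result (three copies of one image) and leaves the how to the cluster, much as HTML describes a page and leaves rendering to the browser.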
