Major Big Data challenges and issues

Big Data is one of the most significant elements of digital transformation and nothing seems to change that. Today, let's look at Big Data challenges and issues to face!

Information is the gold of the 21st century

With the information processing market growing rapidly, we live in a Big Data era. Experts and trendwatchers also call our times “the gold rush of the 21st century”.

Why is information regarded as the new gold?

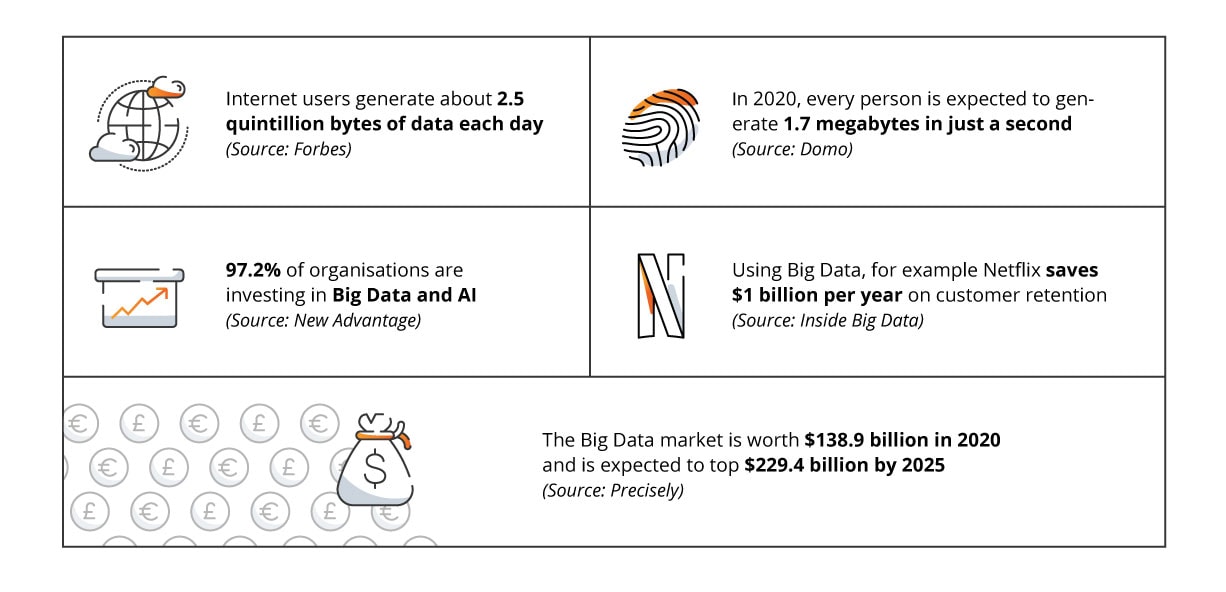

Let the numbers speak for themselves:

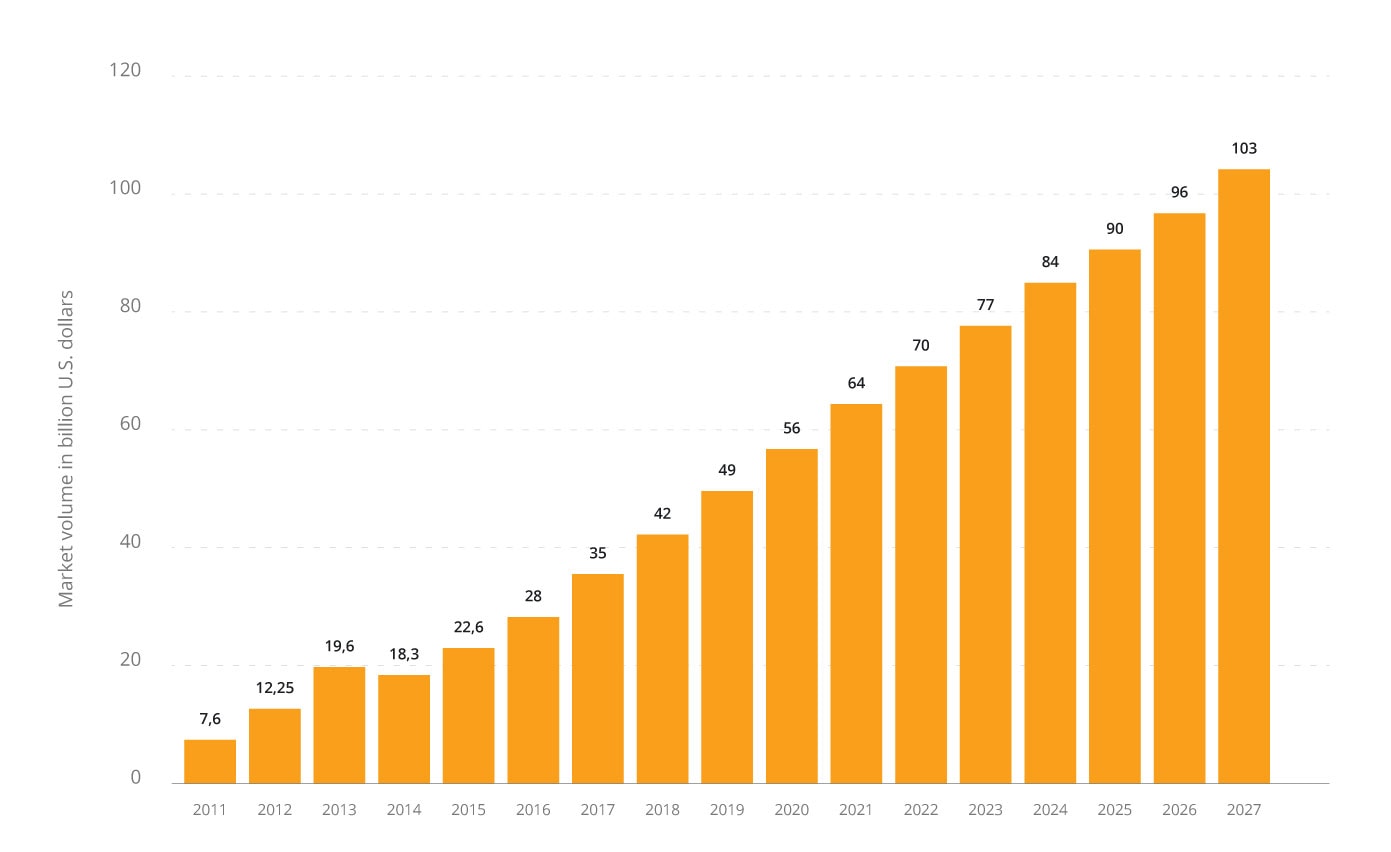

By 2027, the global Big Data market is projected to reach $103 billion.

Every second, massive amounts of priceless information come from the Internet, social networks, text messages and smart devices equipped with IoT sensors. The industries that benefit the most from Big Data are healthcare, banking, media, retail, transport, manufacturing, energy, and utilities.

Big Data is powering the world and pushing organisations to seek advice from Big Data professionals.

Understanding the 4 V’s of Big Data

Every business, regardless of its size, can benefit from the effective use of information. As the Big Data market grows rapidly, the technologies created to gather, categorise, analyse, store and use Big Data will keep developing too.

To start on the Big Data topic, let us quote its most up-to-date definition by Gartner:

“Big Data is high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.” (Gartner)

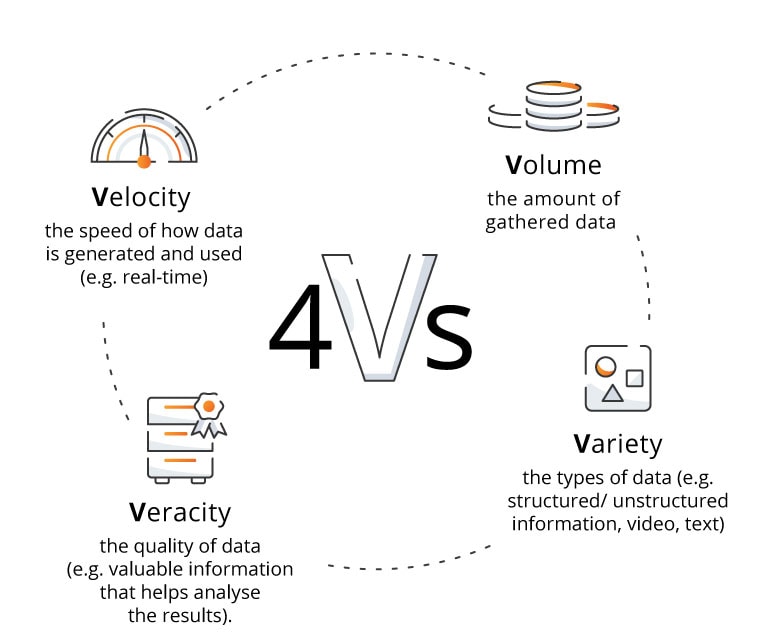

Big Data is commonly described by four characteristics, the 4 V’s of Big Data:

Volume, meaning the sheer amount of data, which can range from terabytes to petabytes and beyond.

Velocity, meaning the speed at which data is generated, collected, and processed.

Variety, meaning the diverse types of data encountered in Big Data scenarios.

Veracity, meaning the quality and reliability of the data.

Big Data: what it means for today’s businesses

For modern businesses, it is the quality, not the quantity, of gathered data that matters.

Nowadays, we can observe a specific shift among companies from being only data-generating to being data-powered.

Let us see how the effective use of Big Data can benefit a business.

Better decision making

Proper technological analysis of Big Data can bring insights that were previously almost impossible to uncover. In marketing and e-commerce, we can study customers’ behaviour in detail, gather real-time information about their actions, adjust communication and personalise items to their specific needs.

Thanks to information gathered from different channels, we can understand our customers’ behaviour and visualise their journeys.

This helps increase customer satisfaction and engagement, and it also leads to better decision-making within the business.

More efficient operation

The concept of lean management, established decades ago by Toyota, is now powered by data. Specific real-time information can make internal processes more effective and smarter.

How? With smart labels and different sensors, we can collect large amounts of streaming data, analyse it, and build predictive models. These models help optimise supply chains, avoid failures, predict prices and plan for the future.
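To make the idea of a predictive model concrete, here is a minimal sketch that fits a straight line to sensor readings and projects when a limit would be reached. The readings, the `LIMIT` threshold and the `fit_line` helper are all hypothetical and chosen for illustration; real predictive maintenance would typically use far richer data and models.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Hypothetical hourly temperature readings from a machine sensor (°C).
hours = [0, 1, 2, 3, 4]
temps = [60.0, 62.0, 64.0, 66.0, 68.0]
LIMIT = 80.0  # assumed threshold above which the machine is at risk

slope, intercept = fit_line(hours, temps)

# Solve LIMIT = slope * hour + intercept for the hour.
hours_to_limit = (LIMIT - intercept) / slope
print(f"Projected to reach {LIMIT} °C at hour {hours_to_limit:.1f}")
```

With a projection like this, maintenance could be scheduled before the threshold is reached rather than after a failure.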

Product & service improvement

Technology lets us observe and analyse a company’s data trends across many different channels. Opinions from social media, reviews, websites, or mobile apps build the whole picture. We see what works and detect recurring doubts or complaints.

With a comprehensive set of information, we can improve the way our products or services work and create a better customer experience. This is extremely important nowadays, as 80% of customers say the experience a company provides is as important as its products and services.

Smart reaction to changes

The COVID-19 situation in 2020 showed that we need to be prepared for unexpected incidents. The coronavirus pandemic not only affected the economy but also rapidly changed business patterns.

Big Data collected during this unusual time helped companies quickly discover new needs, create new strategies and offer products or services in an upgraded form. It is a valuable lesson for all.


The major challenges of Big Data

Big Data presents several major challenges, and addressing them is crucial for organisations keen to harness the full potential of their data.

Some of the most important Big Data challenges include:

Data security and privacy concerns

Data security and privacy have been a serious concern for a long time now. Growing volumes of information need to be protected from intrusions as well as from cyberattacks.

The security problem has several causes:

a security skills gap (according to Cybercrime Magazine, unfilled cybersecurity positions were predicted to reach 3.5 million by 2021);

the fast evolution of cyberattacks (hackers are continually making their threats more complex);

security standards not being followed properly (many organisations still ignore data security standards or apply them selectively).

To maintain the company’s reputation, many C-level executives and managers regard the whole area of data privacy as their top priority, along with security and data ethics.

Data integration and processing

Big Data combines a broad array of data sources, both online and offline. Integrating all information as an architectural overlay across all enterprise systems is essential. It helps see the broad picture, better understand the nature of gathered data and discover insights useful in decision making and planning.

Integrating Big Data sources will be an important step for many companies. It may involve Big Data technologies such as distributed computing frameworks, data integration tools, real-time streaming platforms and specialised analytics software.

Additionally, organisations need data engineers, data scientists, and data analysts with expertise in these technologies to design and implement effective data integration and processing pipelines.

Analytical complexities

Analytical processes involve extracting meaningful insights and patterns from large and complex datasets. Some key challenges related to analytical complexities in Big Data include dimensionality, data gathering, data preprocessing, algorithm selection, computational resources, data storage and real-time analytics.

Addressing these challenges often requires a combination of domain expertise, advanced analytical techniques, Big Data tools and technologies. Data scientists and analysts play a crucial role in navigating these complexities to derive valuable insights.

Larger volume and cloud migration

The amount of data grows enormously over time.

IDC predicts that the Global Datasphere will grow from 33 zettabytes in 2018 to 175 zettabytes by 2025.
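As a rough check on what that forecast implies, the yearly growth rate can be worked out from the two figures quoted above. The sketch below assumes smooth compounding over the seven years from 2018 to 2025, which is a simplification for illustration and not something the IDC forecast itself states.

```python
# Implied compound annual growth rate (CAGR) of the Global Datasphere,
# assuming steady compounding between the two figures quoted above.
start_zb = 33.0    # zettabytes in 2018
end_zb = 175.0     # zettabytes forecast for 2025
years = 2025 - 2018

cagr = (end_zb / start_zb) ** (1 / years) - 1
print(f"Implied annual growth: {cagr:.1%}")
```

Under that assumption, the volume of data grows by roughly 27% every year.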

Such rapid growth is powered both by the increasing number of internet users taking more actions online (e.g. working, banking, shopping, social networking) and by billions of connected smart devices and IoT systems.

That means not only smartphones connected to computers or smartwatches but also larger concepts of smart buildings, smart transportation, or even smart cities.

Such huge volumes require adequate storage to hold and process information. Until recently, gathered data was mostly kept in open-source ecosystems such as Hadoop and in NoSQL databases.

Nowadays, more and more companies decide to switch to cloud solutions that provide agility, scalability, and ease of use. The third decade of the 21st century may belong to hybrid and multi-cloud environments.

Scalability issues

Big Data systems must scale to accommodate growing data volumes and increased processing demands. Scalability and elasticity challenges include both vertical scaling (adding more resources to a single machine) and horizontal scaling (adding more machines to a cluster).

To address these issues, organisations often turn to distributed computing frameworks, cloud computing services, and container orchestration technologies like Kubernetes.
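Horizontal scaling can be illustrated with a small sketch that spreads record keys across the machines in a cluster using a stable hash. The node names, the keys and the `node_for` helper are hypothetical; this is a simplified picture of partitioning, not how any particular framework implements it.

```python
import hashlib
from collections import Counter


def node_for(key: str, nodes: list) -> str:
    """Pick a node for a record key using a stable hash (hypothetical helper)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


# Hypothetical cluster and record keys, for illustration only.
cluster = ["node-a", "node-b", "node-c"]
keys = [f"customer-{i}" for i in range(9000)]

before = Counter(node_for(k, cluster) for k in keys)

# Horizontal scaling: add a fourth machine and partition again.
cluster.append("node-d")
after = Counter(node_for(k, cluster) for k in keys)

print("3 nodes:", dict(before))
print("4 nodes:", dict(after))
```

Each machine holds a smaller share of the keys once the fourth node joins. Note that simple modulo partitioning like this reassigns most keys when the cluster changes size, which is one reason production systems tend to use schemes such as consistent hashing instead.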

Compliance and governance

Compliance and governance are critical Big Data challenges. When organisations collect, process, and store vast amounts of data, they must navigate a complex landscape of regulations, security concerns, and ethical considerations.

Key challenges to take into consideration include data privacy regulations, data security, data retention policies, data provenance, consent management and cross-border data transfer.

To address these challenges, organisations often need dedicated data governance teams or officers who can develop and enforce governance policies.

Additionally, the use of data governance tools and technologies can help automate compliance monitoring and reporting. Regular training and education for employees on data privacy and security best practices are also essential components of an effective data governance strategy.
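As a small illustration of automated compliance monitoring, the sketch below flags records that have been kept longer than a retention policy allows. The categories, the retention periods and the record layout are hypothetical; actual limits depend on the regulations that apply to a given organisation.

```python
from datetime import date, timedelta

# Hypothetical retention rules, in days, per data category.
RETENTION_DAYS = {"marketing": 365, "billing": 7 * 365}


def overdue_records(records, today):
    """Return the ids of records kept longer than their category allows."""
    return [
        record["id"]
        for record in records
        if today - record["collected"]
        > timedelta(days=RETENTION_DAYS[record["category"]])
    ]


# Hypothetical records, for illustration only.
records = [
    {"id": 1, "category": "marketing", "collected": date(2022, 1, 10)},
    {"id": 2, "category": "marketing", "collected": date(2023, 11, 1)},
    {"id": 3, "category": "billing", "collected": date(2020, 5, 5)},
]

flagged = overdue_records(records, today=date(2024, 1, 1))
print(flagged)
```

A check of this kind could run on a schedule and feed a compliance report, so that overdue data is reviewed or deleted rather than forgotten.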

Increased need for Data Scientists and outsourcing services

As the volume and variety of gathered data are growing, the need for best-fitted systems and data specialists is increasing, too. A lot of companies face a lack of competent and experienced staff or have to deal with software that does not work properly.

One solution that is gaining popularity is software outsourcing. Even if a company is not ready to build an in-house software team or hire top-class Data Scientists, all the tough work can be done externally by experienced specialists and their software teams.

It’s important to remember that the end goal is always to strike a balance that optimally meets the organisation’s data demands and constraints. If you feel you would like to know more about the solution, speak to an outsourcing company that may help you make the right decision.

Technology selection

Another important aspect of Big Data challenges is technology selection. The choice of technology stack can significantly impact the efficiency, scalability, and cost-effectiveness of data processing and analytics. Addressing this issue often involves conducting a thorough needs analysis, creating a well-defined technology roadmap, and piloting potential solutions before making a final selection. It’s also important to stay up-to-date with developments in the rapidly evolving Big Data ecosystem and be prepared to adapt technology choices as needed to meet changing requirements.

Cost considerations

Last but not least, organisations must look at cost considerations related to Big Data projects. Building and maintaining Big Data infrastructure can be expensive, so organisations need to carefully manage costs associated with hardware, software, and personnel to ensure a positive return on investment.

A good way of going about it is to conduct a thorough cost analysis and create a clear budget for all Big Data projects.

Also, exploring cost-effective alternatives like open-source software and cloud migration can help mitigate some of the financial challenges associated with Big Data.

The future of the Big Data challenges landscape

The latest predictions bring a lot to look forward to. We can expect a sharper focus on data governance, as many companies want to improve their use of data and address privacy and cybersecurity issues. Cloud computing is crucial for Big Data, as companies want to deliver their products and services faster than ever. To do that, they will adopt modern microservices architectures based on the latest DevOps methodologies.

Organisations will continue to apply Machine Learning, Artificial Intelligence and Natural Language Processing to their Big Data platforms to make faster decisions and recognise trends.

Public and private clouds are expected to coexist. Thanks to 5G, multi-cloud IT strategies and modern hybrid cloud architectures, enterprises can achieve better data management, real-time visibility and security of gathered information.

In the coming years, data will also become fast and actionable. The fast data concept allows data to be processed in real-time streams. This means information is analysed quickly and brings instant value, such as faster decision-making and immediate action. As IDC predicts, nearly 30% of global data will be real-time by 2025.
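The difference between fast data and batch processing can be sketched in a few lines: each value is handled as soon as it arrives, and an up-to-date result is available immediately. The readings and the `rolling_average` function are hypothetical stand-ins for a live stream and a real streaming platform.

```python
from collections import deque
from typing import Iterable, Iterator


def rolling_average(stream: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` values as each new value arrives."""
    recent = deque(maxlen=window)  # older values drop out automatically
    for value in stream:
        recent.append(value)
        yield sum(recent) / len(recent)


# Hypothetical sensor readings standing in for a live stream.
readings = [10.0, 12.0, 11.0, 30.0, 13.0]
averages = list(rolling_average(readings, window=3))
print(averages)
```

Because the function is a generator, a consumer can react to each new average (for example, by raising an alert after the spike to 30.0) without waiting for the whole dataset to be collected.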

Conclusion: crafting a strategic approach to Big Data challenges

Although with new solutions come new challenges, Big Data technology will continue to bring great value. Information from various sources helps see the bigger picture. Provided with specific insights, we can simply make better decisions, know our customers better and understand the details of different, often complicated processes. Big Data lets us follow consumer trends, or even create new ones.

More companies will become aware that they need to make use of actionable data. They will need a new, professional approach, including support from outside software providers.

Keen to speak to a team of professionals about your Big Data projects and needs? Get in touch: we will be happy to help you no matter what stage of the project you’re at.