ML in PL 2022: what did we learn at the conference?

The recent ML in PL 2022 conference (organised by the ML in PL Association) was a great occasion to look at the machine learning landscape in Poland and beyond. Here is more!

ML in PL 2022 conference

The ML in PL conference has been held annually since 2017. At first it was organised at the Faculty of Mathematics, Informatics and Mechanics of the University of Warsaw, but during the pandemic it moved to a virtual platform. This year, after two years of existing only online, it returned to its original location.

The main aims of the conference (and of the ML in PL Association in general) are to:

- Build a strong local community of ML researchers, practitioners, and enthusiasts at various levels of their careers,

- Support new generations of students with interests in ML and promote early research activity,

- Foster the exchange of knowledge in ML,

- Promote business engagement in science,

- Support international collaboration in ML,

- Increase public understanding of ML.

This year’s conference lasted for three days and was packed with knowledge, networking, and entertainment.

ML in PL conference – agenda

It all started with a students’ day, where one could listen to eight presentations given by students or take part in NVIDIA’s workshops on the mechanics of deep learning.

The core part included:

- 9 keynote lectures,

- 3 discussion panels,

- 9 contributed talks,

- 4 sponsors’ talks,

- a poster session with 34 posters.

The many topics covered included learning with positive and unlabelled data, computer vision, probabilistic & auto ML, deep learning, reinforcement learning, NLP, science-related ML, probabilistic neural networks and consolidated learning. Besides the lectures, there were also multiple sponsors’ booths and a conference party, which offered plenty of networking opportunities.

The conference was so rich in topics, lectures, and meetings that it is impossible to cover all of them. That is why I selected the four that I found most inspiring and interesting. Here they are!

Can you transfer the best software engineering practices to machine learning code?

The short answer is: you can, and you should. And you can even get really nice assistance with that! This assistance is called Kedro. Kedro is an open-source Python framework for creating maintainable and modular machine learning code. It was presented by Dominika Kampa and her colleagues from QuantumBlack AI by McKinsey.

One of its most powerful features is pipeline visualisation. ML code can be very complex, and maintaining it, let alone explaining it to business stakeholders, is often a challenge. If one is able to represent the code as a flow with clear inputs, outputs, parameters, dependencies and layers, then it is a lot easier to grasp both the entire solution and its individual parts. I recommend going through a demo, where you can check out how the visualisation works in practice.

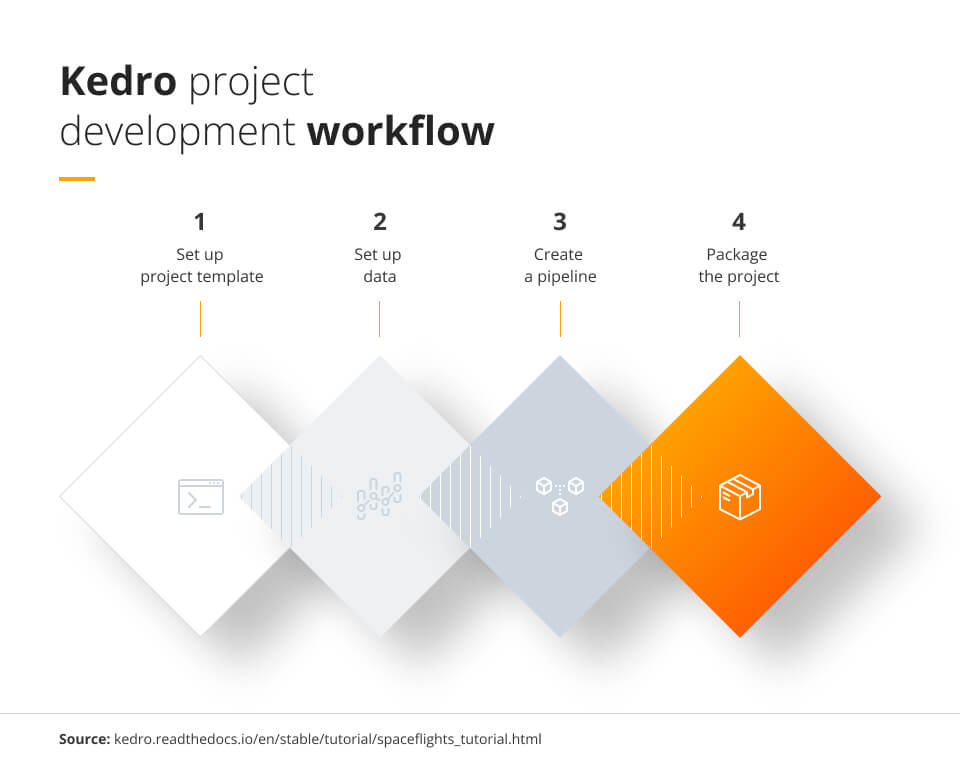

One of the technical foundations of the visualisation is the project template. This is how you start a project: by defining the directory structure. Afterwards you add the data, create a pipeline out of functions, and finally package the project by building documentation and preparing it for distribution.

Another interesting feature is experiment tracking. The results of all your experiments, together with a description of the environment, are stored in one place where you can easily browse them. All you need to do is add a few lines of code.
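
To make the idea of a pipeline built from functions more concrete, here is a minimal plain-Python sketch. It is not Kedro's API: `Node`, `run_pipeline`, and the dataset names are hypothetical and made up for illustration. It only shows the underlying principle, where each step declares named inputs and an output, so the execution order (and a flow diagram) can be derived from those names.

```python
from typing import Any, Callable, Dict, List, NamedTuple, Optional


class Node(NamedTuple):
    """One pipeline step: a function plus the names of its inputs and output."""
    func: Callable[..., Any]
    inputs: List[str]
    output: str


def run_pipeline(nodes: List[Node], catalog: Dict[str, Any]) -> Dict[str, Any]:
    """Run every node as soon as all of its named inputs are available."""
    data = dict(catalog)
    pending = list(nodes)
    while pending:
        ready = [n for n in pending if all(name in data for name in n.inputs)]
        if not ready:
            raise ValueError("missing dataset or circular dependency")
        for n in ready:
            data[n.output] = n.func(*(data[name] for name in n.inputs))
            pending.remove(n)
    return data


def clean(raw: List[Optional[float]]) -> List[float]:
    """Drop missing values."""
    return [x for x in raw if x is not None]


def mean(values: List[float]) -> float:
    """Average of the cleaned values."""
    return sum(values) / len(values)


# Hypothetical pipeline; nodes are listed out of order on purpose,
# because the order is resolved from the input and output names.
nodes = [
    Node(mean, ["clean_data"], "average"),
    Node(clean, ["raw_data"], "clean_data"),
]
result = run_pipeline(nodes, {"raw_data": [1.0, None, 3.0]})
print(result["average"])
```

Because the dependencies live in the names rather than in the call order, the same description can be used both to run the code and to draw it as a graph.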

Does human-AI synergy exist?

One of the most inspiring and enthusiastic talks was given by Petar Veličković, Staff Research Scientist at DeepMind, Affiliated Lecturer at the University of Cambridge and an Associate of Clare Hall, Cambridge. His main research interest is geometric deep learning, particularly graph representation learning. This topic has recently become popular, both in applications and in research. Graphs make it possible to model complex relationships and interdependencies between objects. They have many applications, from social science through logistics to chemistry and many more. Combined with machine learning, they have led to ground-breaking achievements, mainly due to their great expressive power.

Among the most renowned success stories of applying Graph Neural Networks (GNNs), Petar mentioned the discovery of the antibiotic halicin at MIT and the optimisation of expected time of arrival in Google Maps by DeepMind, to which Petar contributed.

An interesting question is whether we can also utilise GNNs in abstract domains such as pure mathematics. Together with a group of mathematicians, Petar checked this on a long-standing open conjecture (40 years without significant progress!) from Representation Theory. The scientists wanted to understand a relationship between two objects, one of which could be represented as a directed graph, which makes it an ideal fit for GNNs. The method chosen allowed them to analyse and interpret the outputs with the use of attribution techniques. Such techniques help to understand which features or structures are relevant to the prediction. The group managed to discover two important structures, which finally led to a mathematical proof.

Their work showed that AI can inspire and assist humans, even in a very abstract domain, because it augments and guides the search within the domain. Empowering human intuition, rather than providing an explicit answer, can have a very powerful impact in the end.

Do machines see like humans?

An artificial neural network is an example of an algorithm strongly inspired by nature, namely by biological neural networks. It loosely models the work of neurons in a biological brain. This method turned out to be a very powerful tool which can solve various problems, from understanding text to interpreting speech and recognising images. But does this human-like representation imply that machine cognition and human cognition pay attention to the same characteristics of an object?

Matthias Bethge, Professor of Computational Neuroscience and Machine Learning at the University of Tübingen and director of the Tübingen AI Center, decided to verify some inductive priors in computer vision. He focused on the misalignment between human and machine decision boundaries, which basically means he examined images which were easy for humans to recognise but difficult for convolutional neural networks (CNNs), and vice versa.

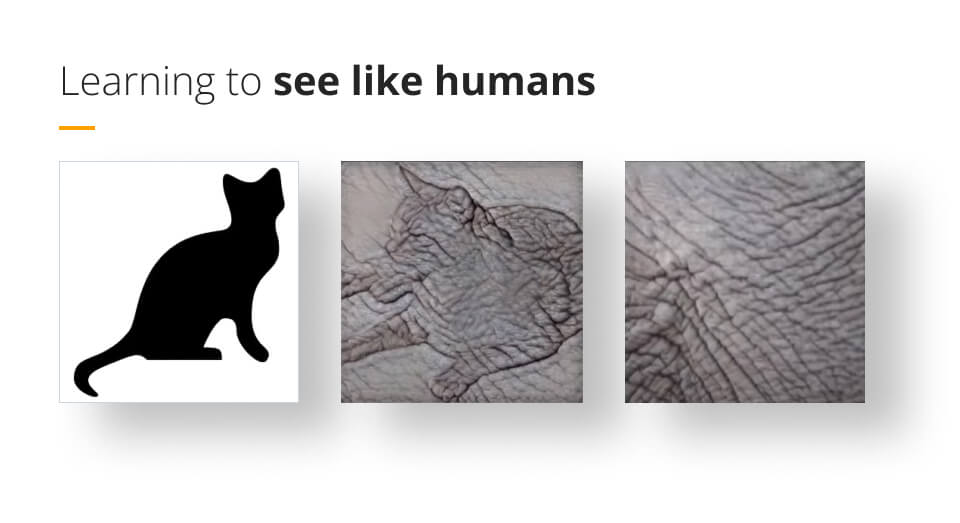

One of the inductive priors he researched was texture-based classification. He measured prediction performance on texturised images (generated from the originals by texture synthesis) and compared it with the predictions for the original images. It turned out that this transformation did not deteriorate the results: as long as the texture was the same as in the original, the algorithm performed well. Hence, he decided to go a step further and constructed a dataset of images that combined the texture of one class with the shape of a different class (for example, the shape of a cat rendered with an elephant’s texture).

He then compared the fraction of objects correctly classified by shape with the fraction correctly classified by texture. It turned out that humans rely almost exclusively on shape, while CNNs were more biased towards using texture information. If CNNs rely strongly on texture, this implies they are also more vulnerable to texture changes. Hence, we can improve the performance of machines by training a model on a dataset augmented with randomised textures (also generated with the use of NNs).

What Matthias Bethge has shown is that we can move closer to the intended solution by comparing machine cognition with human cognition. In his work, he has researched many other approaches which make machine decision-making more human-like. His work keeps showing that a crossover between neuroscience and machine learning can significantly empower the latter.
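
The comparison of shape-based and texture-based decisions can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the evaluation code used in the research: the function `cue_fractions` and the sample trials are hypothetical. Each trial holds the predicted class, the class of the image's shape, and the class of its texture.

```python
from typing import List, Tuple

# (predicted class, shape class, texture class) for one cue-conflict image
Trial = Tuple[str, str, str]


def cue_fractions(trials: List[Trial]) -> Tuple[float, float]:
    """Return the fractions of trials decided by shape and by texture."""
    if not trials:
        raise ValueError("at least one trial is required")
    by_shape = sum(1 for pred, shape, _ in trials if pred == shape)
    by_texture = sum(1 for pred, _, texture in trials if pred == texture)
    return by_shape / len(trials), by_texture / len(trials)


# Made-up predictions, for illustration only.
trials = [
    ("cat", "cat", "elephant"),       # decided by shape
    ("elephant", "cat", "elephant"),  # decided by texture
    ("elephant", "dog", "elephant"),  # decided by texture
    ("car", "bottle", "clock"),       # matches neither cue
]
shape_fraction, texture_fraction = cue_fractions(trials)
print(shape_fraction, texture_fraction)
```

In this toy example the texture fraction is higher than the shape fraction, which is the kind of pattern the talk attributed to CNNs.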

What can you infer about society by analysing bias in ML models?

During the poster session, one poster particularly attracted my attention. It was co-authored by Adam Zadrożny from the National Centre for Nuclear Research and the University of Warsaw, and Marianna Zadrożna from the Academy of Fine Arts. The researchers examined text-to-image models trained on datasets of images and captions crawled from the Internet. They analysed the results of the DALL-E mini model, which, in contrast to DALL-E and DALL-E 2, is more prone to picking up bias from the original datasets.

Bias can be seen as a drawback of a model, but it can turn into a research tool for a much broader topic: misconceptions entrenched in society. The researchers generated images based on prompts linked to health. What they discovered was that, for example, the words ‘autistic child’ returned only pictures of boys, as if girls didn’t suffer from autism. They also checked the prompt ‘person with depression’, which returned pictures of young adults. This made them wonder whether our collective imagination takes into account that depression can also occur among older people.

These are just two examples, but you can find more of them by checking the results of DALL-E mini on your own.

Is the ML in PL conference worth attending?

Definitely yes! I’d recommend this event to everyone interested in machine learning. It offers a lot of inspiring talks, lets you get to know state-of-the-art techniques, and is a great occasion to exchange thoughts with other community members. The thing I like most about this event is that it really broadens our horizons.

See you next year!

________________________________

ABOUT THE AUTHOR

A maths graduate with a solid background in data analytics, working as a Machine Learning Engineer since 2020. She is responsible for evaluating, designing, and implementing AI solutions. Aleksandra is an MLOps enthusiast and tries to convince the business of its importance on every project she works on.

Passionate about the culture of the Middle East and senior gardener after hours.