The future of natural language processing

Much of our world now revolves around automation: email filters, voice assistants, predictive text, search results, and data and text analytics. None of these would be possible without Artificial Intelligence and one of its major branches, Natural Language Processing (NLP).

While NLP has quite a long history of research beginning back in 1950, its numerous uses have emerged only recently. With Google established as the leading search engine, our world becoming more and more digitalised, and all of us increasingly busy, NLP has crept into our lives almost unnoticed. Still, it is what’s behind many of the conveniences in our day-to-day existence.

Like AI specialists, NLP researchers and scientists are trying to incorporate this technology into as many aspects of life as possible. The future seems bright for Natural Language Processing, and as language and technology keep evolving, it will be utilised in ever more fields of science and business.

What is Natural Language Processing (NLP)?

In essence, Natural Language Processing is all about mimicking and interpreting the complexity of our natural, spoken, conversational language. It’s a field of computational linguistics, which is a relatively new science. While interpreting language may seem like a simple task, it’s something that researchers have been scratching their heads about for almost 70 years. See, language is incredibly complicated, especially the spoken kind. Sarcasm, context, emotions, neologisms, slang, and the meaning that connects them are all extremely tough to index, map, and, ultimately, analyse.

Still, with tremendous amounts of data available at our fingertips, NLP has become far easier. The more data you analyse, the better the algorithms will be. The growth of NLP is accelerated further by constant advances in processing power. Even though NLP has grown significantly since its humble beginnings, industry experts say that its implementation remains one of the biggest big data challenges of 2021.

Before putting NLP into use, you’ll need data. By using information retrieval software, you can scrape large portions of the internet.

NLP consists of two fundamental tasks: syntax analysis and semantic analysis.

Syntax analysis

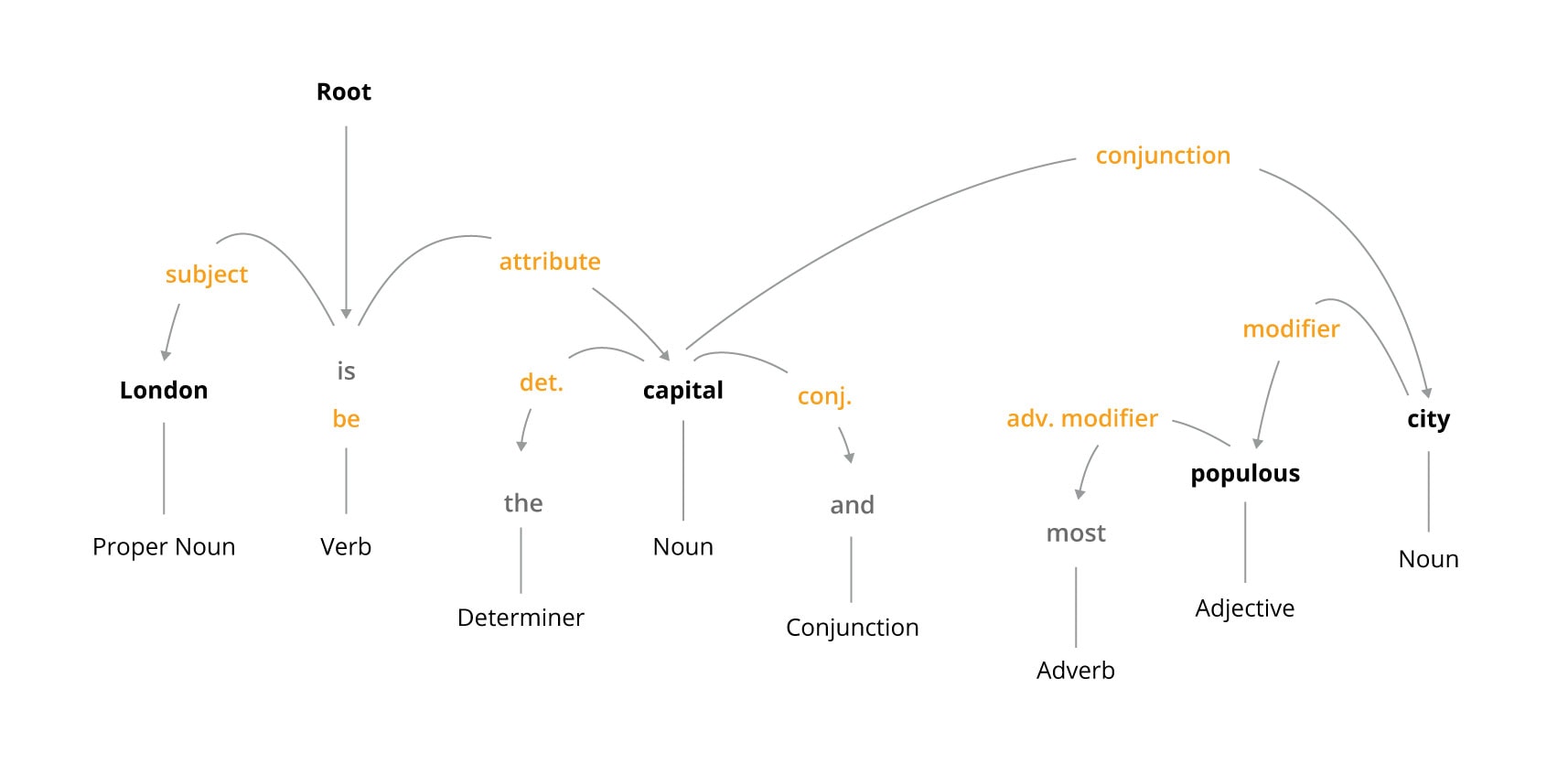

Syntax analysis is used to establish the meaning by looking at the grammar behind a sentence. Also called parsing, this is the process of structuring the text using grammatical conventions of language. Essentially, it consists of the analysis of sentences by splitting them into groups of words and phrases that create a correct sentence.

Syntax analysis doesn’t account for the fact that a grammatically correct sentence can still be meaningless, which is where semantic analysis comes in.
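To make the point concrete, here is a minimal sketch of a syntax check in Python. The word list, the one-letter tags, and the single sentence pattern are all invented for illustration; real parsers use far richer grammars. It shows that a sentence can pass a grammar check while meaning nothing at all.

```python
import re

# Hypothetical toy lexicon: word -> part-of-speech tag
# D = determiner, A = adjective, N = noun, V = verb, R = adverb
LEXICON = {
    "the": "D", "a": "D",
    "colorless": "A", "green": "A",
    "ideas": "N", "dogs": "N",
    "sleep": "V", "bark": "V",
    "furiously": "R", "loudly": "R",
}

# Toy grammar: optional determiner, any number of adjectives, a noun,
# a verb, and an optional adverb.
SENTENCE_PATTERN = re.compile(r"D?A*NVR?")


def tag(sentence):
    """Return the tag string for a sentence, or None if a word is unknown."""
    tags = []
    for word in sentence.lower().rstrip(".").split():
        if word not in LEXICON:
            return None
        tags.append(LEXICON[word])
    return "".join(tags)


def is_grammatical(sentence):
    """Check the sentence against the toy grammar only; meaning is ignored."""
    tags = tag(sentence)
    return tags is not None and SENTENCE_PATTERN.fullmatch(tags) is not None


print(is_grammatical("The dogs bark loudly."))
print(is_grammatical("Colorless green ideas sleep furiously."))
print(is_grammatical("Furiously sleep ideas green colorless."))
```

The second sentence is accepted even though it is nonsense, because the check only looks at word order and word classes.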

Semantic analysis

Our understanding of language is based on years of listening to it and learning its context and meaning. Computers operate using various programming languages, in which the rules for semantics are pretty much set in stone. Human language is different, as it is dynamic. With the invention of machine learning algorithms, computers became able to understand the meaning and logic behind our utterances, at least to a certain degree.

While syntax analysis is far easier with the available lexicons and established rules, semantic analysis is a much tougher task for the machines. Meaning within human languages is fluid, and it depends on the context in many situations. For example, Google is getting better and better at understanding the search intent behind a query entered into the engine. Still, it’s not perfect. I bet that you’ve encountered a situation where you entered a specific query and still didn’t get what you were looking for. NLP helps with that to a great degree, though neural networks can only get so accurate.

How does NLP work?

There are numerous techniques associated with Natural Language Processing. Each of them is different, though they can provide you with invaluable insights concerning your data when used together. These techniques also reduce the time it takes to process data by removing and simplifying particular elements of sentences.

Sentiment Analysis or Opinion Mining

Sentiment analysis is, as the name suggests, the investigation of statements in terms of their sentiment. In essence, it consists of determining whether a portion of text expresses a positive, negative, or neutral attitude towards a certain topic.

The more sophisticated algorithms are now able to discern the emotions behind a statement: strong feelings such as sadness, anger, happiness, or anxiety can be recognised. Sentiment analysis is widely used in marketing to discover the attitude towards products, events, people, brands, etc. Data science services are keen on its development, as it’s one of the most popular NLP use cases.
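The simplest form of sentiment analysis can be sketched with two word lists. The lists below are tiny and made up for this example; production systems typically rely on trained models rather than hand-picked words, so treat this as an illustration of the idea only.

```python
import re

# Hypothetical, deliberately tiny sentiment lexicons
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "angry"}


def sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    # +1 for every positive word, -1 for every negative word
    score = sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great"))
print(sentiment("Terrible battery and poor support"))
print(sentiment("The parcel arrived on Tuesday"))
```

A word-counting approach like this misses sarcasm and negation ("not good"), which is exactly why the more sophisticated algorithms exist.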

Parsing

Parsing is all about splitting a sentence into its components to find out its meaning. By looking into the relationships between the words, algorithms are able to establish the structure of the sentence.

Stemming and Lemmatisation

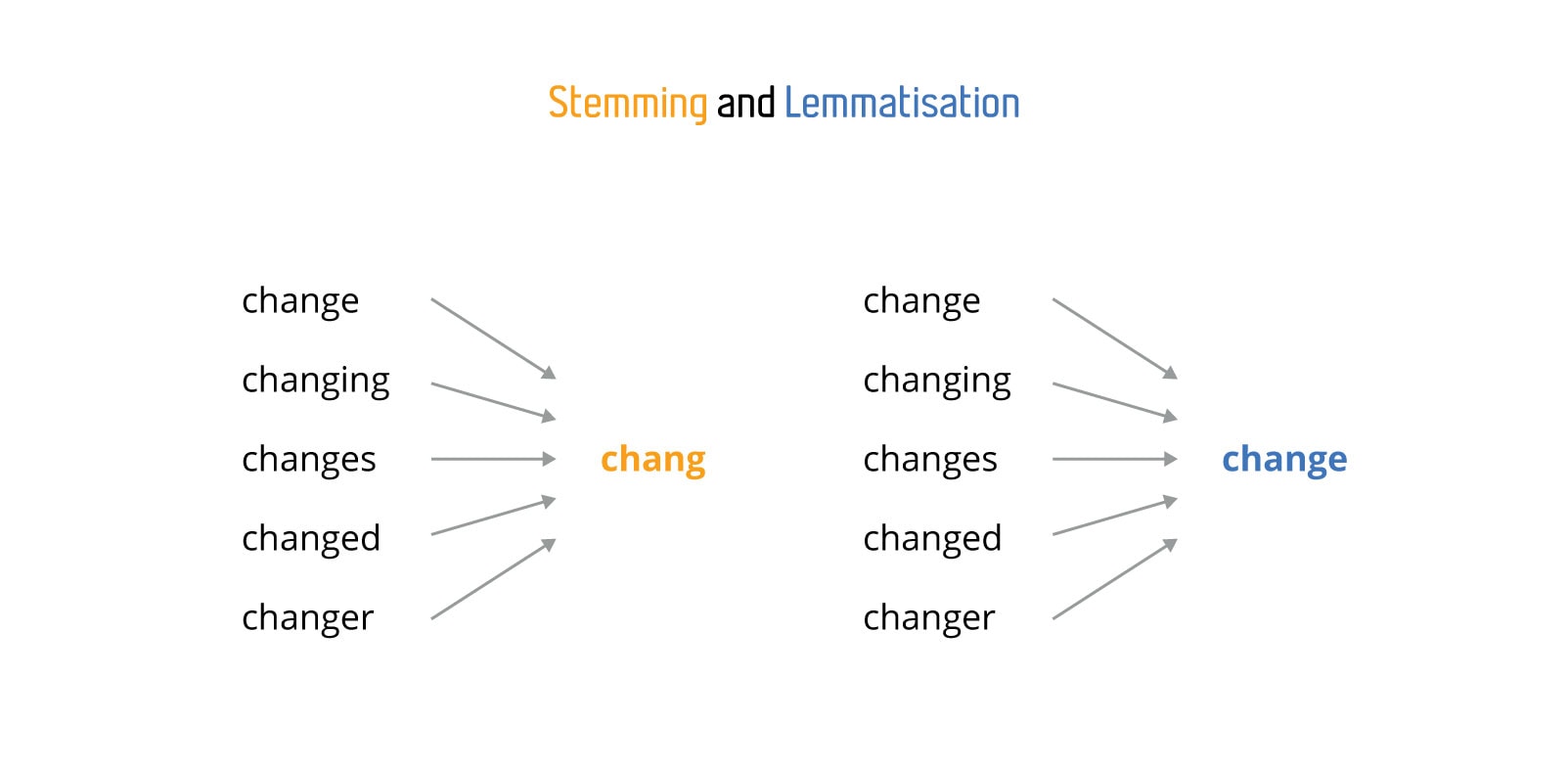

Stemming is a way of cutting down on processing power and thus shortening the analysis time. It converts words into their stems, e.g. “buying” will be converted to “buy.” Consider the sentences “I’ll be buying some shoes,” and “I will buy some shoes.” They have the same meaning, so the algorithm reduces “buying” in the first sentence to its stem “buy,” decreasing the number of distinct word forms it needs to analyse.

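Lemmatisation differs from stemming in that it reduces words to their dictionary forms (lemmas) instead of simply chopping off endings. For example, it maps the irregular form “bought” to “buy,” which a suffix-stripping stemmer cannot do.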
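The difference between the two can be sketched in a few lines of Python. The suffix list and the lemma table below are small, made-up stand-ins; real stemmers and lemmatisers use much larger rule sets and dictionaries.

```python
# Hypothetical suffix list for a very crude stemmer
SUFFIXES = ("ing", "ed", "s")

# Hypothetical lemma dictionary: inflected form -> dictionary form
LEMMAS = {"buying": "buy", "bought": "buy", "better": "good", "mice": "mouse"}


def stem(word):
    """Strip the first matching suffix, keeping at least three letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def lemmatise(word):
    """Look the word up in the lemma dictionary; unknown words pass through."""
    word = word.lower()
    return LEMMAS.get(word, word)


for w in ("buying", "bought", "better"):
    print(w, "-> stem:", stem(w), "| lemma:", lemmatise(w))
```

The stemmer handles “buying” but leaves “bought” and “better” untouched, while the dictionary lookup resolves all three. The trade-off is that the dictionary has to exist and be maintained.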

Imagine that you’re looking into terabytes of information to gather insights. Such situations will occur fairly frequently, and the amount of time you save is significant.

Named Entity Recognition

Named Entity Recognition (NER) is the process of matching named entities with pre-defined categories. It consists of first detecting the named entity and then simply assigning a category to it. Some of the most widely-used classifications include people, companies, time, and locations. NER is helpful when you need an overview of immense amounts of writing.
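The two steps, detecting an entity and assigning it a category, can be illustrated with a lookup table (often called a gazetteer) and one regular expression. The entries and category names below are assumptions chosen for the example; modern NER systems learn these from annotated text instead.

```python
import re

# Hypothetical gazetteer: known name -> category
GAZETTEER = {
    "Intel": "COMPANY",
    "Google": "COMPANY",
    "London": "LOCATION",
    "Ada Lovelace": "PERSON",
}

# Very rough stand-in for time expressions: four-digit years from 1900 to 2099
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def find_entities(text):
    """Return (entity, category) pairs in the order they appear in the text."""
    found = []
    for name, category in GAZETTEER.items():
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((match.start(), name, category))
    for match in YEAR_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "TIME"))
    # Sort by position so the result follows reading order
    return [(name, category) for _, name, category in sorted(found)]


print(find_entities("Google opened a London office in 2019."))
```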

Relationship Extraction

The Relationship Extraction (RE) process takes named entities from a text and then recognises the relationships between them. For example, you could ask Google, “who is the chairman of Intel,” and the algorithm, using RE, would identify the relationship between “chairman” and “Intel,” providing you with the correct answer. RE can also be used to analyse large volumes of customer service queries. It detects particular relationships and categorises them in terms of priority. This, in turn, facilitates your support tasks and improves customer experience.
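As a rough sketch of the idea, the snippet below pulls (person, role, organisation) triples out of sentences shaped like “X is the ROLE of Y” and then answers a “who is the ROLE of Y” lookup. The pattern, the people, and the companies are all invented for the example; real systems cope with far more varied phrasing.

```python
import re

# Hypothetical pattern for sentences like "Jane Doe is the chairman of Acme"
ROLE_PATTERN = re.compile(
    r"(?P<person>[A-Z][a-z]+(?: [A-Z][a-z]+)+) is the "
    r"(?P<role>[a-z]+) of (?P<org>[A-Z][A-Za-z]+)"
)


def extract_relations(text):
    """Return a list of (person, role, organisation) triples found in text."""
    return [
        (m.group("person"), m.group("role"), m.group("org"))
        for m in ROLE_PATTERN.finditer(text)
    ]


def who_is(role, org, relations):
    """Answer 'who is the <role> of <org>' from extracted triples, or None."""
    for person, found_role, found_org in relations:
        if found_role == role and found_org == org:
            return person
    return None


# Made-up text; the names and companies are fictional
text = "Jane Doe is the chairman of Acme. John Roe is the founder of Initech."
relations = extract_relations(text)
print(relations)
print(who_is("chairman", "Acme", relations))
```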

Topic Modelling and Classification

Topic Modelling is most commonly used to cluster keywords into groups based on their patterns and similar expressions. It’s a technique that is entirely automatic and unsupervised, meaning that it doesn’t require pre-defined categories or human input. Topic Classification, on the other hand, needs you to provide the algorithm with a set of topics prior to the analysis. While modelling is more convenient, it doesn’t give you results as accurate as classification does.
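Topic Classification, the supervised variant, can be sketched as keyword matching against topics you define up front. The topics and keywords below are hypothetical, and a real classifier would usually be trained on labelled examples rather than hand-written sets.

```python
import re

# Hypothetical pre-defined topics and their keywords
TOPICS = {
    "billing": {"invoice", "refund", "payment", "charge"},
    "delivery": {"parcel", "shipping", "courier", "late"},
}


def classify(text):
    """Return the topic whose keywords overlap most with the text, or None."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {topic: len(words & keywords) for topic, keywords in TOPICS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(classify("Please issue a refund for the second payment"))
print(classify("The courier says my parcel is late"))
print(classify("Hello there"))
```

Note that the topics have to be supplied before any text is analysed, which is the extra effort classification demands compared with modelling.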

Stop Words Removal

One of the essential elements of NLP, Stop Words Removal gets rid of words that provide you with little semantic value. Usually, it removes prepositions and conjunctions, but also words like “is,” “my,” “I,” etc.
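A minimal sketch of the idea follows. The stop word list here is a short, made-up sample; real lists contain a few hundred entries and vary by language and task.

```python
import re

# Hypothetical, abbreviated stop word list
STOP_WORDS = {
    "is", "my", "i", "it", "the", "a", "an",
    "and", "or", "of", "to", "in", "on",
}


def remove_stop_words(text):
    """Lowercase the text, split it into words, and drop the stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if w not in STOP_WORDS]


print(remove_stop_words("My phone is in the kitchen and I need it"))
```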

NLP uses within data science

NLP has a lot of uses within the branch of data science, which then translates to other fields, especially in terms of business value.

Speech recognition

NLP is what lies behind speech recognition. By analysing speech patterns, meaning, relationships, and classification of words, the algorithm is able to assemble the statement into a complete sentence. Using Deep Learning, you also get to “teach” the machine to recognise your accent or speech impairment, which makes it more accurate. Additionally, the technology called Interactive Voice Response allows disabled people to communicate with machines much more easily.

Market analysis

NLP allows companies to determine current trends by analysing large amounts of available data. Using Topic Classification, the machine can find out which categories are the most common. Social media analysis, for example, can provide you with insights concerning your industry, product, or brand straight from the consumers’ point of view, which improves your business intelligence. You get to see what the sentiment is, which topics are discussed most often, what people think of your competitors, what the latest trends are, and so on. And what better source of information than your audience?

Search results

Using NLP, search engines can determine the intent behind each query. Google utilises this technology to provide you with the best possible results. With the introduction of BERT in 2019, Google considerably improved its detection of intent and context. This is especially useful for voice search, as the queries entered that way are usually far more conversational and natural. Google incorporated BERT mainly because as many as 15% of queries entered daily have never been seen before. As such, the algorithm doesn’t have much data regarding these queries, and NLP helps tremendously with establishing the intent.

Predictive text

NLP finds its use in day-to-day messaging by providing us with predictions about what we want to write. It allows applications to learn the way we write and improves functionality by giving us accurate recommendations for the next words.
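One simple way to picture this is a bigram model: count which word tends to follow which in past messages, then suggest the most frequent followers. The sketch below assumes that approach and uses made-up messages; actual keyboards use considerably more advanced language models.

```python
from collections import Counter, defaultdict


def train(messages):
    """Count, for every word, which words followed it in the messages."""
    following = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest(following, word, k=3):
    """Return up to k words most often seen after the given word."""
    counts = following.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Made-up message history standing in for what the user has typed before
history = ["see you soon", "see you tomorrow", "see you soon then", "thank you"]
model = train(history)
print(suggest(model, "you", k=2))
print(suggest(model, "see", k=1))
```

The more messages the model sees, the better its counts reflect how a particular person writes, which is the "learning" described above.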

Language translation

Online translators wouldn’t be possible without NLP. Remember a few years ago when software could only translate short sentences and individual words accurately? Well, that’s history. For example, Google Translate can convert entire pages fairly correctly to and from virtually any language.

Disease prediction

NLP is widely used in healthcare as a tool for making predictions of possible diseases. NLP algorithms can provide doctors with information concerning progressing illnesses such as depression or schizophrenia by interpreting speech patterns. Still, psychiatry is not the only field of medicine that NLP finds use in. Medical records are a tremendous source of information, and practitioners use NLP to detect diseases, improve the understanding of patients, facilitate care delivery, and cut costs.

Search Engine Optimisation

With NLP and BERT interconnected, the entire field of SEO has undergone considerable changes following the 2019 update. Context, search intent, and sentiment are currently far more important than they’ve been in the past. BERT has impacted about 10% of all queries, which is a tremendous number. It has changed how the search intent behind those queries is interpreted, which in turn makes keyword research and the optimisation process different.

The future of NLP

With the amount of available information constantly growing and algorithms becoming increasingly sophisticated and accurate, NLP is surely going to grow in popularity. It’s altering the way humans and machines interact. The previously mentioned uses of NLP are proof that it’s a technology that improves our quality of life by a significant margin.

As much as 80% of the information that surrounds us is unstructured. For this reason, NLP is one of the largest fields of data science. Organising this data is a considerable challenge that’s being tackled daily by countless researchers. Continuous advancements are being made in the area of NLP, and we can expect it to affect more and more aspects of our lives.