Why quality assurance really matters

We all know quality matters. Yet many companies treat quality assurance as a nice-to-have rather than as an integral part of their software development processes. History shows such an attitude may have far-reaching consequences, sometimes much more dramatic than we can imagine.

What is quality assurance?

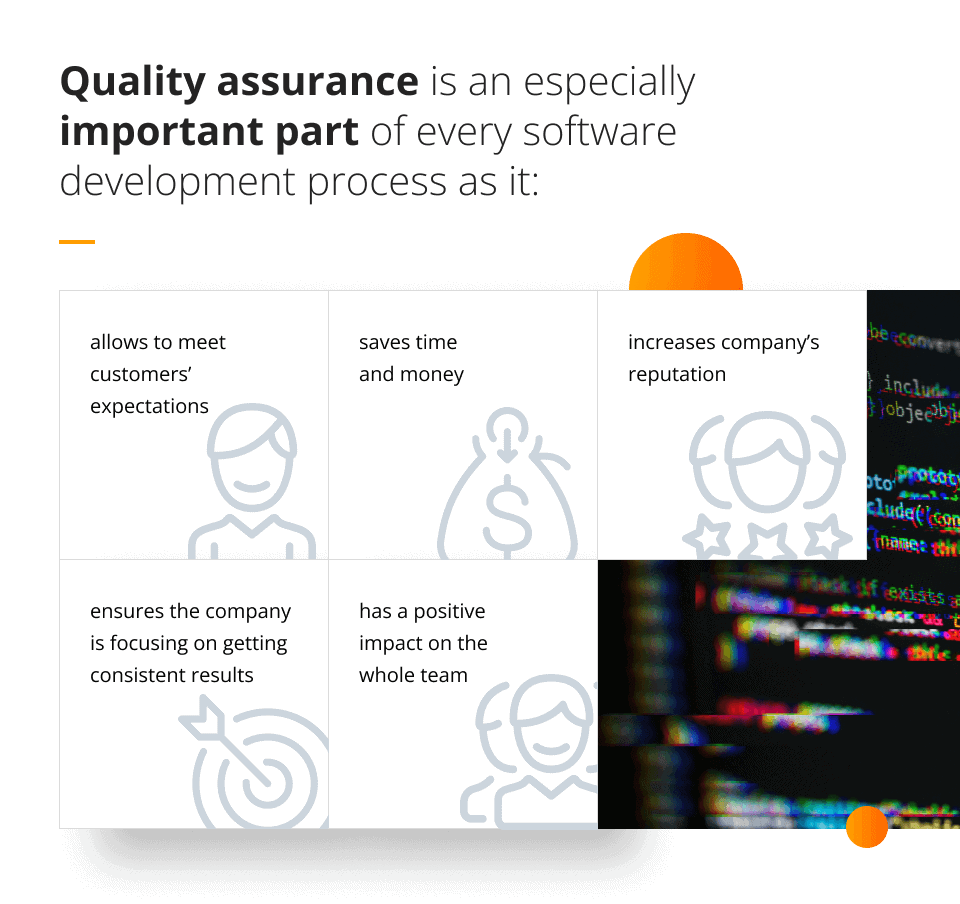

Quality assurance is the process of ensuring that the product being developed has the right level of quality and meets customers’ expectations in terms of functionality, reliability, efficiency, price, design, and so on.

In my previous post, “Why is quality assurance important in software development?”, I talked about the importance and benefits of a properly established quality assurance process.

When it comes to software development, the main risks related to not having the right level of quality assurance include:

- undetected bugs and errors,

- system misbehaviours,

- poor system security,

- unstable performance.

All those risks have an impact on how businesses operate and how they are perceived by their clients. Poor quality assurance may mean missed deadlines, financial losses, reputational damage, unsatisfied end users, and fewer customers deciding to buy the product. But sometimes the consequences may be far more dramatic.

Real life examples of why quality assurance really matters

History is full of events showing why quality assurance matters. Here are some of the most interesting ones.

The global financial crisis, 2008

The global financial crisis of 2008 and the events that led to it are today known as the ‘greatest quality failure of all time’. The phrase was first used by Paul Moore, Senior Risk and Compliance Executive at HBOS, who was dismissed after warning the board about the consequences of the risks it was taking. Senior managers never saw the danger in the risky decisions they were making, which led to the largest crisis the financial world had ever seen.

As noted by the Reserve Bank of Australia, the crisis was caused by excessive risk-taking in a favorable macroeconomic environment, increased borrowing by banks and investors, and regulation and policy errors.

There were several things that could have been done to improve the processes and quality to prevent the crisis:

- enhancing the quality of financial products and services, because the crisis was fueled in part by the proliferation of complex and risky financial products and services,

- strengthening the risk management processes, because developing better processes for identifying and mitigating risks, including stress testing and scenario analysis, could have helped to prevent the crisis,

- improving the quality and reliability of financial reporting, as investors and regulators were not fully aware of the true financial condition of many financial institutions,

- enhancing the quality and effectiveness of regulatory and policy frameworks,

- ensuring that all personnel are properly trained and competent.

Challenger Space Shuttle explosion, 1986

The Space Shuttle Challenger was launched on 28th January 1986. Shortly after taking off, it exploded, destroying the vehicle and killing all seven crew members. The explosion was the result of a bad decision-making process and a lack of quality assurance. The O-ring seals in the solid rocket boosters were not ready to be used in cold temperatures, yet NASA officials, worried about the political and financial consequences of a delay, decided to go ahead with the mission despite the danger.

In addition to the faulty O-ring seals, the disaster was also caused by several other factors, including a lack of communication and a lack of understanding within NASA about the risks associated with launching the Challenger in cold weather.

There were also problems with the design and testing of the solid rocket boosters (SRBs), as well as with the decision-making process within NASA that led to the launch being approved despite the known risks.

There were several things that could have been done to prevent the Challenger disaster. Some of the most significant ones include:

- improving the design of the O-ring seals,

- testing the O-rings more thoroughly,

- developing better communication and decision-making processes within NASA,

- implementing better safety procedures and protocols,

- improving the design and testing of the solid rocket boosters.

BP Deepwater Horizon explosion and oil spill, 2010

BP’s Deepwater Horizon rig exploded on 20th April 2010, in what quickly became known as one of the biggest environmental disasters in history. The explosion killed eleven people and discharged about four million barrels of oil into the Gulf of Mexico, devastating the entire ecosystem.

As explained by Graham Freeman on his blog:

“numerous post-incident documents report that many of the failures that led to the disaster were based on shortcomings in Quality planning. In particular, the BP Macondo well team neglected to follow the best practice of performing quality assurance and risk assessment of the design and testing of the cement casing, the integrity of which failed to prevent the initial escape of oil and gas that led to the blowout. Subsequent investigative journalism and testimony paints a picture in which the Culture of Quality was subordinated to cost-cutting and procedural shortcuts to try and get the drilling project, which was five weeks behind schedule, back on track.”

Here are some specific measures that could have been taken:

- implementing thorough testing and inspection protocols to ensure that all equipment and systems are properly tested and inspected before being put into service,

- adhering to industry standards and best practices for offshore drilling operations, including those published by the American Petroleum Institute (API) and the International Association of Oil & Gas Producers (IOGP),

- providing better training and education for workers in the offshore drilling industry to ensure that they have the necessary knowledge and skills,

- conducting regular safety drills and exercises,

- implementing robust safety systems such as blowout preventers, which are designed to prevent the uncontrolled release of oil and gas.

Failure of Boeing Co. 787 Dreamliner project, 2013

The Boeing 787 Dreamliner was introduced in 2011 and was supposed to change the entire air travel industry as a less expensive, more customer-friendly and simply better option for air passengers. Yet in 2013 the entire Boeing 787 fleet was grounded, following several incidents of fire aboard some of the planes.

The causes of those incidents were complex and included problems with manufacturing, the supply chain and organisational factors. All of them can be traced back to poor quality assurance: numerous documents and reports confirm that Boeing did not adopt an effective Reliability Program Plan, in which best practice tasks would be implemented to produce a reliable product.

Some of the things that could have been done to prevent the failure of the project include:

- improving the design of the aircraft, as the Boeing 787 Dreamliner had a number of innovative design features that also introduced new risks and challenges that were not adequately addressed,

- ensuring that manufacturing and assembly processes are robust and reliable,

- improving communication and decision-making processes within the company,

- developing better risk management processes.

Therac-25 killing patients

One of the scariest examples of a failure in quality assurance procedures was the Therac-25 case. This radiation therapy machine, which was produced in 1982, caused the deaths of several patients by giving them massive overdoses of radiation. As noted by Wikipedia, because of concurrent programming errors, the machine sometimes gave patients radiation doses that were hundreds of times greater than normal. The series of accidents showed the importance of software control standards, ethics, and due diligence in resolving software bugs.

It is important to note that bugs in the code were not the root cause of this failure. The entire system design was flawed. No fault tree analysis, which examines how the system as a whole will behave when individual parts fail, was conducted for the entire system. Unit testing, which might have caught bugs in the code at an early stage, was not performed by the team.

The full analysis of the case can be found in the paper “Medical Devices: The Therac-25” by Nancy Leveson, who wrote it at the University of Washington and is now Professor of Aeronautics and Astronautics at MIT.

The paper also describes causal factors and lessons learnt that may apply to other projects:
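To make the idea of a fault tree analysis more concrete, here is a minimal sketch in Python. It is not taken from the Therac-25 investigation: the event names and probabilities are invented for illustration, and the calculation assumes that all basic events are independent, which a real analysis would have to justify.

```python
from dataclasses import dataclass
from math import prod
from typing import List, Union


@dataclass
class Basic:
    """A basic event with an estimated probability of occurring."""
    name: str
    probability: float


@dataclass
class Gate:
    """An intermediate event combining its inputs with AND or OR logic."""
    name: str
    kind: str  # "AND" or "OR"
    inputs: List["Node"]


Node = Union[Basic, Gate]


def probability(node: Node) -> float:
    """Probability of a node, assuming independent basic events."""
    if isinstance(node, Basic):
        return node.probability
    ps = [probability(child) for child in node.inputs]
    if node.kind == "AND":
        # All inputs must occur.
        return prod(ps)
    if node.kind == "OR":
        # At least one input occurs.
        return 1 - prod(1 - p for p in ps)
    raise ValueError(f"unknown gate kind: {node.kind}")


# Hypothetical tree with made-up numbers, for illustration only.
bad_setup = Gate("inconsistent machine setup", "OR", [
    Basic("race condition during fast data entry", 0.01),
    Basic("wrong mode entered and not noticed", 0.02),
])
overdose = Gate("patient overdose", "AND", [
    bad_setup,
    Basic("no independent hardware interlock stops the beam", 1.0),
])

if __name__ == "__main__":
    print(f"P(overdose) = {probability(overdose):.4f}")
```

Even a toy tree like this makes one thing visible: if nothing independent of the software can stop the beam, every software fault propagates straight to the top event.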

- the overconfidence in software,

- confusing reliability with safety,

- the lack of defensive design,

- failure to eliminate root causes,

- complacency and blind trust,

- unrealistic risk assessments,

- inadequate investigation or follow-up on accident reports,

- inadequate software engineering practices, especially in quality standards,

- software reuse does not guarantee safety,

- safe versus friendly user interface – sometimes making machines as easy to use as possible may conflict with safety.
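Some of these lessons, defensive design in particular, translate directly into code. The sketch below is a hypothetical illustration, not the Therac-25 software: the names `BeamRequest`, `check_request`, `MAX_DOSE_RAD` and the position labels are all invented. It shows the general idea of refusing to act unless every precondition has been checked against an independent source of truth.

```python
from dataclasses import dataclass


class InterlockError(RuntimeError):
    """Raised when a request fails a safety check and must not proceed."""


# Hypothetical upper bound; a real limit would come from clinical requirements.
MAX_DOSE_RAD = 200.0

# Hypothetical mapping: hardware position each beam mode requires.
REQUIRED_POSITION = {"electron": "electron_scan", "xray": "xray_target"}


@dataclass(frozen=True)
class BeamRequest:
    mode: str             # "electron" or "xray"
    dose_rad: float       # prescribed dose
    sensed_position: str  # position reported by an independent sensor


def check_request(request: BeamRequest) -> None:
    """Validate a request; raise InterlockError instead of guessing."""
    if request.mode not in REQUIRED_POSITION:
        raise InterlockError(f"unknown mode: {request.mode!r}")
    if not 0 < request.dose_rad <= MAX_DOSE_RAD:
        raise InterlockError(f"dose out of range: {request.dose_rad}")
    expected = REQUIRED_POSITION[request.mode]
    if request.sensed_position != expected:
        # Fail safe: the hardware state disagrees with what the software assumes.
        raise InterlockError(
            f"mode {request.mode!r} needs position {expected!r}, "
            f"sensor reports {request.sensed_position!r}"
        )


if __name__ == "__main__":
    check_request(BeamRequest("xray", 150.0, "xray_target"))  # passes silently
    try:
        check_request(BeamRequest("xray", 150.0, "electron_scan"))
    except InterlockError as err:
        print("blocked:", err)
```

Checks like these are also easy to cover with unit tests, and a software check of this kind complements a hardware interlock rather than replacing it.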

Patriot Missile software accident

The accident occurred during the Gulf War in 1991, when the United States and its allies were engaged in a military conflict with Iraq. On February 25, 1991, a Patriot missile defense system operating at Dhahran, Saudi Arabia failed to track and intercept an incoming Scud missile, which subsequently hit an Army barracks, killing 28 Americans.

The failure of the Patriot missile defense system was a significant event that highlighted the challenges and limitations of missile defense systems. It was later determined that the cause was a software problem in the way the system kept track of time: a small inaccuracy grew the longer the system ran without a restart, so after about 100 hours of continuous operation the Patriot was looking for the incoming Scud in the wrong place and failed to track it. This error was not detected during testing and surfaced only once the system was deployed in a real-world scenario and run continuously for long periods.

In response to the incident, the manufacturer of the Patriot missile defense system, Raytheon, implemented a number of changes to the system’s software and hardware in order to improve its performance and reliability.

The company also implemented more robust testing and quality assurance processes to help prevent similar problems from occurring in the future. The Patriot missile defense system has continued to be used by the United States and other countries around the world, but it remains subject to ongoing testing and improvement in order to ensure its effectiveness and reliability.

The detailed analysis of this incident can be found in the 1992 government report “Patriot Missile Defense: Software Problem Led to System Failure at Dhahran, Saudi Arabia”.
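The report cited above traces the failure to a timing inaccuracy that grew with the system's uptime. The sketch below reproduces the arithmetic as it is usually explained. It is not Raytheon's code: it assumes the clock counted tenths of a second, and that the constant 1/10 was stored truncated to 23 binary fractional bits, a detail taken from commonly cited analyses of the incident.

```python
from fractions import Fraction
from math import floor

# Assumption: number of binary fractional bits kept when storing 1/10.
FRACTION_BITS = 23
TICK = Fraction(1, 10)  # the clock counts tenths of a second


def truncate(value: Fraction, bits: int) -> Fraction:
    """Chop a value to the given number of binary fractional bits."""
    scale = 2 ** bits
    return Fraction(floor(value * scale), scale)


def clock_drift_seconds(hours_up: float, bits: int = FRACTION_BITS) -> float:
    """Accumulated timing error after running for hours_up hours."""
    error_per_tick = TICK - truncate(TICK, bits)
    ticks = Fraction(hours_up) * 3600 * 10
    return float(error_per_tick * ticks)


if __name__ == "__main__":
    for hours in (8, 20, 100):
        print(f"{hours:>3} h uptime -> drift {clock_drift_seconds(hours):.4f} s")
```

Under these assumptions the drift after 100 hours is roughly a third of a second, which is a long time for a target travelling at well over a kilometre per second. A test that only ran the system for a few hours would see a drift too small to matter, which is why long-duration scenarios belong in the test plan.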

Conclusion

Quality failures can have serious and far-reaching consequences, as demonstrated by the numerous incidents described in this article. From the Challenger Space Shuttle explosion and the BP Deepwater Horizon oil spill to the global financial crisis and the Patriot missile defense system incident, these events highlight the importance of maintaining high standards of quality in all aspects of operations.

To prevent future incidents, it is essential to implement robust processes and procedures for ensuring the reliability and performance of products and services, as well as to continuously monitor and assess the quality. By taking these steps, organisations can prevent costly and potentially devastating incidents caused by quality failures.