Data preprocessing: a comprehensive step-by-step guide

Data preprocessing enhances data quality, resolves discrepancies, and ensures that the data is correct, consistent, and reliable.

Data preprocessing is the process of preparing raw data for analysis or for training machine learning models. A crucial step in the analytical process, it improves data quality, resolves discrepancies, and ensures that the data is correct, consistent, and reliable. This gives subsequent analysis and decision-making a solid foundation.

Let’s say you have a dataset containing the square footage, number of bedrooms, location, and price of a set of houses. Before you can analyse this data or use it to train machine learning models to predict housing prices, you need to preprocess it. Once you have worked through the steps below, the data will be in a clean, consistent format suitable for further analysis – read on to find out what to do.

The role of data quality in preprocessing

Data analysis results rely heavily on the dataset’s accuracy, reliability, consistency, and completeness, all of which are directly tied to data quality. The preprocessing phase plays a crucial role in enhancing the quality of the data to prevent any negative impact on the outcomes of the analysis:

- Non-responses or incomplete data, if left unhandled, can bias results, for example by underestimating the true value.

- Inaccurate values and inconsistent data descriptors can produce misleading insights and unreliable results.

- Duplicates can bias analysis outcomes and lead to incorrect insights.

To address these challenges, organisations often rely on specialised data processing services.

Benefits of data preprocessing

Here are some key benefits of data preprocessing:

- Enhances predictions: Preprocessing is essential when training machine learning models because it removes errors, inconsistencies, and irrelevant information. Models then learn from high-quality data, which leads to more accurate predictions.

- Streamlines analysis and modelling: Data preprocessing streamlines the machine learning pipeline by preparing data for analysis and modelling up front. This saves time and effort later, because less manual cleaning and transformation is needed during the analytics phase.

- Improves interpretability: Clean, consistently formatted data is easier to understand. This makes it easier to identify important patterns and relationships, which supports better decision-making.

Challenges and risks of data preprocessing

Data preprocessing is not without its challenges and risks. What practitioners do not always mention is that it often takes up most of the time in an analytical or machine learning project. Data cleaning and merging must be done carefully so as not to introduce new discrepancies into the dataset.

Given the time, effort, and risks involved, it is worth carrying out data preprocessing centrally and sharing the results with other teams.

Another risk is data leakage, which occurs when information from the test or validation data inadvertently influences the preprocessing steps, leading to overly optimistic results.
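To make the leakage risk concrete, here is a minimal Python sketch (standard library only) based on the housing example. The function names `fit_standardiser` and `apply_standardiser` and the price values are hypothetical. The idea is that any statistic used in preprocessing, here the mean and standard deviation used for scaling, is learned from the training split alone and then reused unchanged on the test split.

```python
import statistics


def fit_standardiser(train_values):
    """Learn the mean and standard deviation from the training split only."""
    return statistics.mean(train_values), statistics.pstdev(train_values)


def apply_standardiser(values, params):
    """Apply previously learned parameters to any split (train, validation or test)."""
    mean, std = params
    return [(v - mean) / std for v in values]


# Hypothetical house prices (in thousands), already split into train and test sets.
train_prices = [250, 270, 290, 310, 330]
test_prices = [300, 900]

# Correct: the scaling parameters come from the training data alone.
params = fit_standardiser(train_prices)
train_scaled = apply_standardiser(train_prices, params)
test_scaled = apply_standardiser(test_prices, params)

# Leaky: fitting on train + test lets the test outlier (900) shift the mean and
# spread, so information from the test set influences preprocessing.
leaky_params = fit_standardiser(train_prices + test_prices)
```

Fitting on the combined data lets the unusually high test price pull the mean upwards, so the test set quietly shapes the preprocessing it is later evaluated on.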

However, the dangers and difficulties of data preprocessing can be reduced through careful planning and validation at each stage.

Crucial steps of data preprocessing

Step 1: data cleaning for accurate insights

Data cleaning is a crucial step in preprocessing data to ensure accurate insights – simply put, it’s all about finding and fixing mistakes, discrepancies, and outliers in the values. The end goal is data that subsequent analyses can rely on.

Keep in mind that data cleaning involves judgement calls and should be tailored to the specific dataset and analysis goals. Carefully consider the implications of each cleaning step, and document the decisions made to keep the analysis transparent and reproducible.

Some techniques for data cleaning include:

Handling missing data

Missing values can be handled by:

- Identifying missing values in the dataset and understanding the extent of missingness.

- Deciding on a missing-value strategy based on the nature of the data and the analysis goals. Options include imputing missing values with the mean, median, or mode, or using more advanced imputation methods such as regression or machine learning algorithms.

- Being cautious with imputation: carefully consider how imputed values may affect the analysis results.
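The first two steps can be sketched in Python using only the standard library. The records, column names, and the choice of median imputation for `sqft` are illustrative assumptions; the right strategy depends on your data.

```python
import statistics

# Hypothetical housing records; None marks a missing value.
houses = [
    {"sqft": 1400, "bedrooms": 3, "price": 250_000},
    {"sqft": None, "bedrooms": 2, "price": 180_000},
    {"sqft": 2100, "bedrooms": None, "price": 410_000},
    {"sqft": 1750, "bedrooms": 3, "price": None},
    {"sqft": 1600, "bedrooms": 4, "price": 320_000},
]


def missing_share(records, column):
    """Fraction of records where `column` is missing."""
    return sum(r[column] is None for r in records) / len(records)


def impute_median(records, column):
    """Return copies of the records with missing values in `column` set to the column median."""
    observed = [r[column] for r in records if r[column] is not None]
    fill = statistics.median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in records]


# Step 1: measure how much is missing in each column.
for col in ("sqft", "bedrooms", "price"):
    print(col, missing_share(houses, col))

# Step 2: apply one strategy (median imputation) to one column.
imputed = impute_median(houses, "sqft")
```

The median is used here because it is less sensitive to extreme values than the mean; the original records are left untouched so the imputation decision stays easy to review.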

Detecting and treating outliers

Outliers, or data points that drastically differ from the majority, must be identified and addressed throughout the data cleaning process. Using statistical methods or visualisation tools, it is possible to identify and then remove, modify, or convert outlying data points. The choice of treatment depends on the context and goals of the analysis, considering the impact of outliers on the results.

Dealing with outliers can be done by:

- Identifying outliers using visualisation techniques or statistical methods (e.g., box plots, z-scores),

- Investigating the causes of outliers to understand whether they are genuine extreme values or data entry errors,

- Deciding on outlier treatment, depending on the analysis goals and the nature of the outliers. Options include removing them, transforming them, or treating them as missing values.
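One common statistical rule, the one behind box-plot whiskers, flags values more than 1.5 times the interquartile range (IQR) below the first quartile or above the third. A minimal sketch, assuming a hypothetical list of prices in thousands, might look like this. It needs Python 3.8+ for `statistics.quantiles`, and the `method="inclusive"` choice is an assumption: different tools compute quartiles slightly differently.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (the box-plot whisker rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical prices in thousands; 2900 might be a typo for 290.
prices = [250, 265, 270, 280, 295, 310, 320, 2900]
flagged = iqr_outliers(prices)
```

The function only flags candidates; whether 2900 is a data entry error or a genuine luxury property is a question for the investigation step.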

Eliminating duplicate data

Identifying and deleting duplicate records from a dataset is an important data preprocessing step. Duplicate records give some observations more weight than others and increase the risk of drawing misleading conclusions. Deleting duplicates ensures that each data point appears only once, which prevents redundancy and improves the dataset’s quality for further analysis and modelling.

Removing duplicates typically involves:

- Identifying the duplicate records,

- Removing duplicates if they are not meaningful or if they could skew the analysis results. Make sure that removing duplicates is appropriate for the specific analysis task.
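A simple way to identify and remove duplicates is to treat a chosen set of fields as a key and keep only the first record for each key. In this Python sketch, the `listings` data and the decision that `location`, `sqft`, and `price` together identify a house are hypothetical; choosing the key fields is exactly the judgement call described above.

```python
def drop_duplicates(records, key_fields):
    """Keep the first record for each combination of `key_fields`, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record[f] for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical listings: records 1 and 3 describe the same house.
listings = [
    {"id": 1, "location": "Leeds", "sqft": 1400, "price": 250_000},
    {"id": 2, "location": "York", "sqft": 1600, "price": 320_000},
    {"id": 3, "location": "Leeds", "sqft": 1400, "price": 250_000},
]

deduped = drop_duplicates(listings, ["location", "sqft", "price"])
```

The `id` field is deliberately left out of the key, because two listings of the same house can carry different IDs.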

Step 2: data integration for comprehensive analysis

Integration involves bringing different data sets together into one cohesive unit. It can be challenging, because the data may have different types, structures, and meanings. The aim is to create a unified view of the information, even if it comes from various sources or is stored in different places. This allows for easier analysis and understanding of the data as a whole, enabling more comprehensive and insightful analysis.

The stages of data integration include:

Merging multiple data sources: enhancing data completeness

During merging, data from many sources is brought together and combined. This procedure is crucial for establishing a single point of reference. However, it can be a difficult task because of the complexity of untangling numerous sources and addressing issues such as redundancy, inconsistencies, and poor data quality.

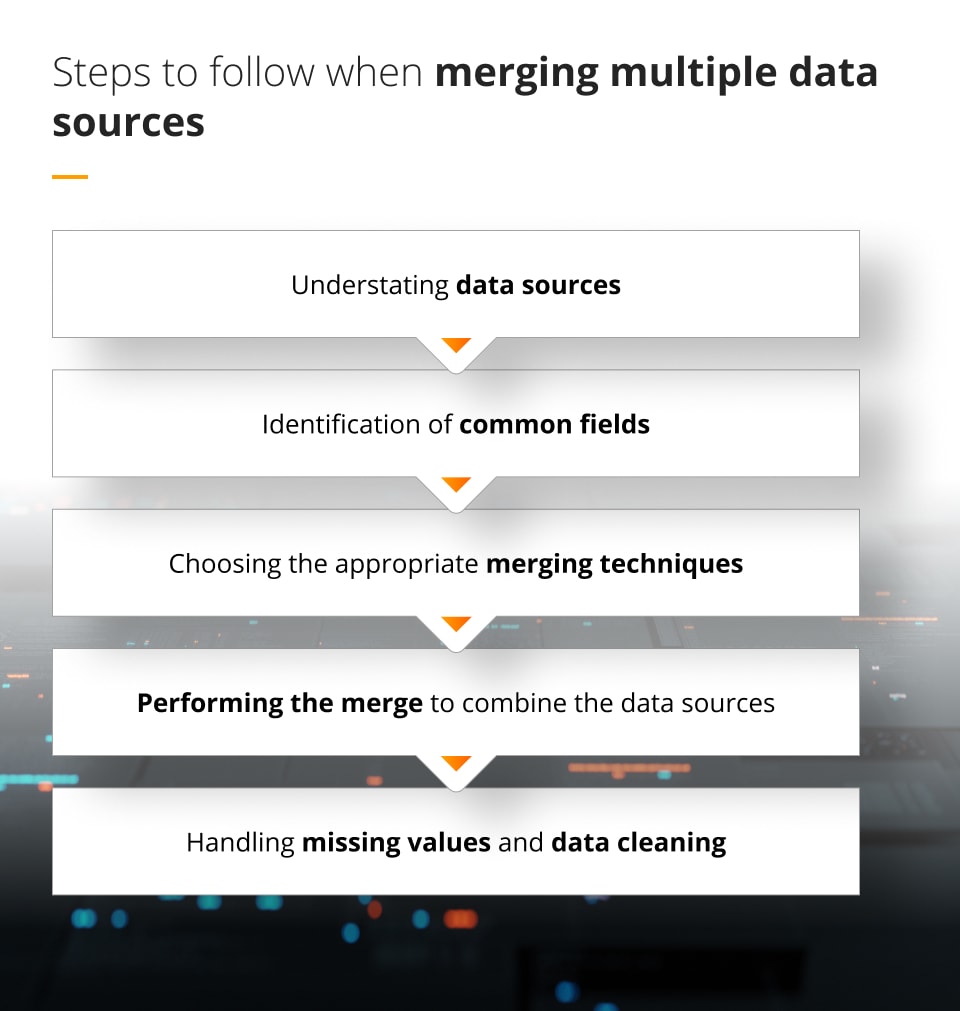

Steps to follow when merging multiple data sources include:

- Understanding the data sources: familiarising yourself with the characteristics of each source, including its structure, variables, and data quality,

- Identifying common fields (keys) that have unique values or a high degree of overlap across sources,

- Choosing the appropriate merging technique (inner join, left join, right join, or full outer join) based on the relationship between the common fields and the desired outcome,

- Performing the merge to combine the data sources on the common fields. This can be done with SQL joins, merge functions in programming languages, or equivalent methods in the tools you are using,

- Handling missing values and cleaning the data after the merge, as joins can introduce gaps (for example, unmatched rows in a left join) or duplicates.
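As an illustration of a left join, the Python sketch below combines hypothetical house listings with hypothetical area statistics on a shared `location` field. In practice you would more likely use a SQL `LEFT JOIN` or your tool’s merge function; this version simply makes the mechanics visible, including the missing values that an unmatched row produces.

```python
def left_join(left, right, key):
    """Left-join two lists of dicts on `key`.

    Every row in `left` is kept. Rows with no match in `right` get None for the
    right-hand fields. Assumes the inputs share no column names other than `key`.
    """
    # Index the right-hand rows by key value (one key may match several rows).
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    right_fields = sorted({field for row in right for field in row if field != key})

    joined = []
    for row in left:
        matches = index.get(row[key], [])
        if matches:
            for match in matches:
                joined.append({**row, **{f: match.get(f) for f in right_fields}})
        else:
            joined.append({**row, **{f: None for f in right_fields}})
    return joined


# Hypothetical sources: house listings and per-area statistics.
listings = [
    {"id": 1, "location": "Leeds", "price": 250_000},
    {"id": 2, "location": "York", "price": 320_000},
    {"id": 3, "location": "Hull", "price": 150_000},
]
area_stats = [
    {"location": "Leeds", "median_income": 31_000},
    {"location": "York", "median_income": 33_000},
]

merged = left_join(listings, area_stats, "location")
# Post-merge check: which listings found no matching area statistics?
unmatched = [row["id"] for row in merged if row["median_income"] is None]
```

The `unmatched` list is the kind of post-merge check the last step refers to: those rows now need their own missing-value decision.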

Data consistency: resolving schema and format inconsistencies

Differences in how sources organise and structure their data are a major cause of discrepancies and can lead to erroneous analysis results. To overcome this, data reduction methods can be used to remove unnecessary details from the combined dataset, while data transformation techniques normalise the schema and format so that the information stays consistent.

Important steps in promoting data consistency include:

- Standardisation of data formats,

- Resolving inconsistent units of measurement,

- Handling inconsistent categorical variables,

- Reconciling discrepancies in data values,

- Ensuring consistent time zones,

- Aligning variable definitions,

- Using data validation checks.
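Several of these steps can be sketched together in Python. Assuming hypothetical raw records, the example below converts areas given in square metres to square feet, maps inconsistent location labels to one canonical value, parses two date formats into a single date type, and rejects unknown units as a basic validation check. The alias table and date formats are assumptions, and time zones and variable definitions are not covered.

```python
from datetime import datetime

SQM_TO_SQFT = 10.7639  # 1 square metre is approximately 10.7639 square feet

# Hypothetical mapping from inconsistent labels to one canonical value.
LOCATION_ALIASES = {"nyc": "New York", "ny": "New York", "new york": "New York"}

# Hypothetical date formats found in the sources. Day-first is assumed for the
# slash format; ambiguous formats like this must be confirmed with each source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format in turn and return a datetime.date."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {text!r}")


def standardise(record):
    """Return a record with consistent units, labels and date type."""
    unit = record["area_unit"]
    if unit not in ("sqft", "sqm"):
        # Simple validation check: fail loudly instead of guessing.
        raise ValueError(f"Unknown area unit: {unit!r}")
    area = record["area"] * SQM_TO_SQFT if unit == "sqm" else record["area"]
    location = record["location"].strip()
    location = LOCATION_ALIASES.get(location.lower(), location)
    return {"sqft": round(area), "location": location, "listed": parse_date(record["listed"])}


raw = [
    {"area": 1500, "area_unit": "sqft", "location": "New York", "listed": "2023-05-01"},
    {"area": 140, "area_unit": "sqm", "location": " NYC ", "listed": "01/05/2023"},
]
clean = [standardise(r) for r in raw]
```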

Step 3: data transformation for actionable insights

Data transformation involves converting information into a suitable format for analysis. Data preprocessing techniques are used to modify the values and ensure they are organised effectively. It helps make the data consistent, comparable, and ready for downstream processes.

Feature scaling: balancing variables for optimal analysis

Feature scaling is performed during data preprocessing to handle features with highly varying magnitudes, ranges, or units. Without proper feature scaling, many machine learning algorithms (distance-based methods such as k-nearest neighbours in particular) may give features with large values more weight than features with small values, regardless of how important they actually are.

Some common methods for feature scaling include:

- Min-max scaling (normalisation),

- Z-Score standardisation,

- Robust scaling,

- Log transformation.
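The four methods can be written as short Python functions. This is a standard-library sketch on a hypothetical list of square footages; libraries such as scikit-learn provide equivalent, more thoroughly tested implementations, which is what you would usually reach for in practice.

```python
import math
import statistics


def min_max(values):
    """Rescale to [0, 1]. Assumes the values are not all identical."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Centre on the mean and divide by the (population) standard deviation."""
    mean, std = statistics.mean(values), statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def robust(values):
    """Centre on the median and divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]


def log_transform(values):
    """Compress large values with log(1 + x); requires every x > -1."""
    return [math.log1p(v) for v in values]


# Hypothetical square footages, including one very large house.
sqft = [800, 1200, 1500, 2000, 6000]
scaled = {
    "min_max": min_max(sqft),
    "z_score": z_score(sqft),
    "robust": robust(sqft),
    "log": log_transform(sqft),
}
```

Note how the single large house compresses the min-max values of the others towards zero, while robust scaling, which is based on the median and IQR, is much less affected by it.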

Encoding categorical variables: converting qualitative data to numerical

Categorical data encoding converts words or labels into numbers so that machine learning models can use them. Real-world data often describes information using categories, such as a house’s location, but most models work with numerical inputs because they can perform calculations on them. Encoding turns these categories into numbers, allowing models to make predictions and analyse the data effectively.

Some common techniques for encoding categorical variables include:

- One-Hot Encoding

- Binary Encoding

- Ordinal Encoding

- Count Encoding

- Target Encoding
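Three of these encodings, plus a simple mean-based form of target encoding, are sketched below in plain Python on a hypothetical `locations` column, a hypothetical property-condition scale, and hypothetical prices. Binary encoding is omitted for brevity.

```python
from collections import Counter


def one_hot(values):
    """One column per category: 1 where the row has that category, else 0."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def ordinal(values, order):
    """Map each category to its position in an explicitly given order."""
    rank = {category: i for i, category in enumerate(order)}
    return [rank[v] for v in values]


def count_encode(values):
    """Replace each category with how often it occurs."""
    counts = Counter(values)
    return [counts[v] for v in values]


def target_encode(values, targets):
    """Replace each category with the mean target value for that category."""
    sums, counts = {}, {}
    for v, t in zip(values, targets):
        sums[v] = sums.get(v, 0) + t
        counts[v] = counts.get(v, 0) + 1
    return [sums[v] / counts[v] for v in values]


# Hypothetical columns from the housing dataset.
locations = ["Leeds", "York", "Leeds", "Hull"]
conditions = ["good", "poor", "excellent", "good"]
prices = [250, 300, 270, 150]  # in thousands

columns, one_hot_rows = one_hot(locations)
condition_codes = ordinal(conditions, order=["poor", "good", "excellent"])
location_counts = count_encode(locations)
location_means = target_encode(locations, prices)
```

Because target encoding uses the label itself, the category means should be computed on training data only; otherwise it becomes a source of the data leakage described earlier.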

Step 4: dimensionality reduction for enhanced efficiency

Dimensionality reduction is like decluttering your dataset. When you have a lot of features or variables in your data, it can get overwhelming and confusing for the models to understand. So, dimensionality reduction removes unnecessary or repetitive information, making the data easier to analyse and saving storage space. By doing this, we can focus on the most important aspects of the information gathered and make better predictions or understand patterns more effectively.

Dimensionality reduction also helps with data exploration: projecting the data onto two or three dimensions makes it possible to plot the samples and gain insights from the resulting visualisation.

Some commonly used techniques for dimensionality reduction include:

- Principal Component Analysis (PCA), which identifies the directions along which the data varies the most.

- t-Distributed Stochastic Neighbor Embedding (t-SNE) – a non-linear dimensionality reduction technique commonly used for visualisation purposes. It aims to preserve the local structure and relationship between data points.
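To illustrate the idea behind PCA, the Python sketch below computes only the first principal component: it standardises the features, builds their covariance matrix, and finds the leading eigenvector by power iteration. The data and the function name are hypothetical, and for real work a library implementation (for example scikit-learn’s `PCA`) is the usual choice. t-SNE is considerably more involved and is not sketched here.

```python
import math
import statistics


def pca_first_component(rows, iterations=200):
    """Return the first principal component, each row's score on it, and the
    share of total variance that component explains.

    Assumes every column varies (non-zero standard deviation).
    """
    n, d = len(rows), len(rows[0])
    columns = [[row[j] for row in rows] for j in range(d)]
    means = [statistics.mean(c) for c in columns]
    stds = [statistics.stdev(c) for c in columns]

    # Standardise so that no feature dominates just because of its units.
    z = [[(row[j] - means[j]) / stds[j] for j in range(d)] for row in rows]

    # Sample covariance matrix of the standardised data.
    cov = [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(d)] for i in range(d)]

    # Power iteration: repeatedly multiply by the covariance matrix and normalise.
    # The vector converges to the eigenvector with the largest eigenvalue
    # (assuming the starting vector is not orthogonal to it).
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]

    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    explained = eigenvalue / sum(cov[i][i] for i in range(d))
    scores = [sum(r[j] * v[j] for j in range(d)) for r in z]
    return v, scores, explained


# Hypothetical houses: (square footage, bedrooms, price in thousands).
houses = [(1400, 3, 250), (1600, 3, 300), (1750, 4, 330), (2100, 4, 410), (2500, 5, 480)]
component, scores, explained = pca_first_component(houses)
```

`explained` is the share of total variance captured by the first component; when it is high, each house can be summarised by a single score with little loss of information.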

Visual aids for clear understanding

Visualisation in preprocessing refers to creating visual representations of data using charts, graphs, and plots. It reveals distributions, patterns, trends, and relationships between variables, making it easier for analysts and data scientists to spot unusual or problematic values and to decide which preprocessing steps are necessary.

Summary: why is data preprocessing important?

By improving data quality, resolving discrepancies, and preparing the information for analysis, preprocessing sets the stage for effective data analysis.

Data preprocessing is even more critical for machine learning and AI models, as it ensures that the models learn from accurate and reliable values, leading to more accurate predictions.

Data solutions consulting providers can help organisations navigate the complexities of data preprocessing and ensure that their data is properly prepared for analysis and modelling. If you would like advice on the best data preprocessing techniques, or are curious about how to make data preprocessing work to your advantage, do not hesitate to get in touch with our team.

As a company experienced in delivering high-standard data preprocessing to our clients, we will be happy to look into your needs and help you achieve your goals.