NLP techniques: key methods that will improve your analysis

Natural Language Processing is one of the most talked-about subjects in today's world of innovation and data science, as it has the power to transform the way we work and live. What is it, and how can it help you with your data analysis? Let's find out.

What is Natural Language Processing (NLP)?

Before we look into Natural Language Processing methods and how they can help you achieve your goals, let’s look at its definition and history.

Natural Language Processing is a branch of artificial intelligence concerned with enabling computers to process, interpret, and generate human language. Its history dates back to the 1950s. The early years were marked by rule-based systems that relied on handcrafted linguistic rules to process and analyse text. Statistical methods gained ground from the 1990s, and the 2010s brought a significant breakthrough with the rise of neural networks and deep learning, which delivered strong results in tasks such as language understanding, machine translation, question answering, and sentiment analysis.

Another key milestone in NLP is the availability of large-scale pre-trained language models, such as OpenAI’s GPT (Generative Pre-trained Transformer) and Google’s BERT. These models are trained on massive amounts of text data, allowing them to learn rich contextual representations of language. Researchers and developers can fine-tune these models for specific tasks, enabling rapid progress in various NLP applications.

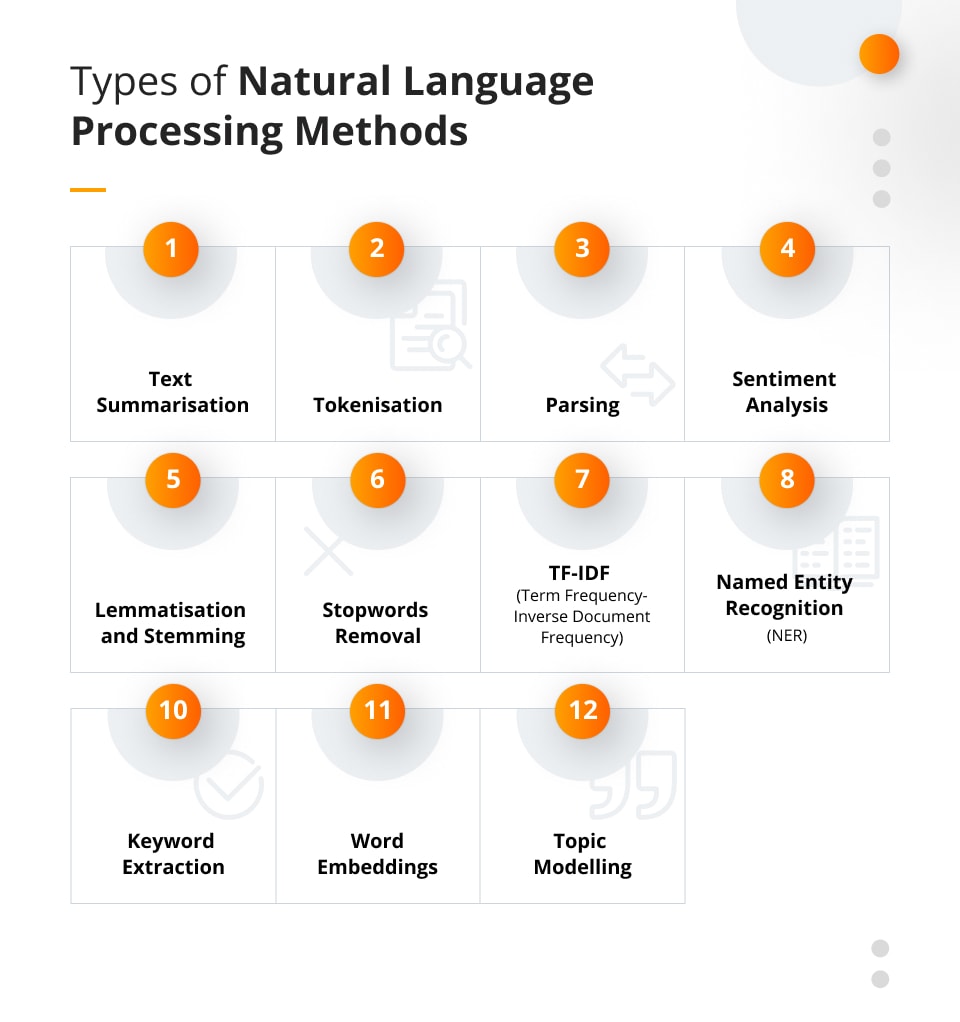

Types of Natural Language Processing methods

Natural Language Processing techniques can greatly enhance the analysis of textual data, which is central to most business operations. They can help you streamline your customer service or make more informed decisions based on thorough market analysis.

Some key methods that can improve your NLP analysis include:

Text Summarisation

NLP text summarisation is the process of generating a concise and coherent summary of a given document or text. It aims to capture the most important information and main ideas from the original text, while condensing it into a shorter form.
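One simple way to approach this is extractive summarisation, where the summary is built from sentences already present in the text. The sketch below is a minimal illustration, not a production method: the function name `summarize`, the sentence-splitting heuristic, and the word-frequency scoring are all assumptions chosen for brevity.

```python
import re
from collections import Counter


def summarize(text: str, max_sentences: int = 1) -> str:
    """Return the highest-scoring sentences, kept in their original order."""
    # Rough sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    # Count how often each word occurs in the whole text.
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence: str) -> int:
        # A sentence scores the sum of the text-wide frequencies of its words.
        return sum(freq[w] for w in re.findall(r"[a-z']+", sentence.lower()))

    top = sorted(sentences, key=score, reverse=True)[:max_sentences]
    return " ".join(s for s in sentences if s in top)


text = (
    "Cats sleep a lot. "
    "Cats and dogs are popular pets, and cats are quiet. "
    "Birds sing."
)
print(summarize(text, max_sentences=1))
```

Because the score is a plain sum, longer sentences tend to win. Real systems usually normalise for length, ignore stopwords, or use a trained model to generate the summary instead.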

Tokenisation

Tokenisation is the process of breaking down a text into individual units called tokens, which can be words, phrases, or even characters. It forms the basis for most NLP tasks. Tokenisation helps in counting word frequencies, understanding sentence structure, and preparing input for further analysis.
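As a rough illustration, word-level tokenisation and frequency counting can be sketched with the Python standard library. The `tokenize` helper and its regular expression are assumptions made for this example; NLP libraries ship more robust tokenisers that handle punctuation, URLs, and other languages.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return word tokens, keeping inner apostrophes."""
    return re.findall(r"[a-z0-9]+(?:'[a-z0-9]+)*", text.lower())


tokens = tokenize("The cat sat on the mat. The mat didn't mind.")
print(tokens)
# Word frequencies follow directly from the token list.
print(Counter(tokens).most_common(2))
```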

Parsing

Parsing, or syntactic parsing, is the process of analysing the grammatical structure of a sentence to determine the syntactic relationships between words. Two common approaches are constituency parsing, which groups words into nested phrases, and dependency parsing, which links each word to the word it depends on. The goal of parsing is to understand the sentence’s structure and how the words relate to each other, which is crucial for various NLP tasks such as information extraction, question answering, machine translation, and sentiment analysis.

Sentiment Analysis

Sentiment analysis determines the emotional tone of a piece of text, typically classifying it as positive, negative, or neutral. It is useful for understanding public opinion, customer feedback analysis, and social media monitoring. Techniques for sentiment analysis include lexicon-based approaches, machine learning models, and deep learning methods.
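The lexicon-based approach is the easiest of the three to sketch. In the example below, the word lists `POSITIVE` and `NEGATIVE` and the `sentiment` function are invented for illustration; real lexicons are far larger and usually assign graded scores and handle negation.

```python
import re

# Tiny hand-made lexicons, assumed for this example only.
POSITIVE = {"good", "great", "excellent", "love", "helpful"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow"}


def sentiment(text: str) -> str:
    """Classify text by counting positive and negative lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    # +1 for each positive word, -1 for each negative word.
    score = sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The support team was great and very helpful"))
print(sentiment("Terrible app, slow and bad"))
```

A sketch like this ignores negation ("not good") and sarcasm, which is one reason machine learning and deep learning models are often preferred in practice.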

Lemmatisation and Stemming

Lemmatisation and stemming are techniques used to reduce words to their base or root form. Lemmatisation converts words to their dictionary form (lemma) using vocabulary and morphological analysis, while stemming strips affixes, usually suffixes, with simple heuristic rules, so the result is not always a real word. Both reduce word variation, which makes counts and comparisons across a text more consistent.
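The difference between the two can be seen in a deliberately naive sketch. The suffix list, the `LEMMAS` lookup table, and both function names are assumptions for illustration; established stemmers such as the Porter stemmer and dictionary-backed lemmatisers apply far more rules.

```python
# Suffixes are tried in this order; the list is illustrative, not complete.
SUFFIXES = ("ing", "ed", "es", "s")

# A toy lemma dictionary standing in for a real vocabulary resource.
LEMMAS = {"was": "be", "better": "good", "mice": "mouse", "running": "run"}


def naive_stem(word: str) -> str:
    """Strip the first matching suffix, keeping at least three characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def naive_lemma(word: str) -> str:
    """Look the word up in the lemma dictionary, falling back to itself."""
    return LEMMAS.get(word.lower(), word.lower())


for w in ("jumping", "running", "mice", "better"):
    print(w, naive_stem(w), naive_lemma(w))
```

Note how the stemmer turns "running" into "runn", which is not a word, while the dictionary lookup returns "run". The lookup, in turn, only knows the words it was given.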

Stopwords Removal

Stopwords are common words like “and,” “the,” or “is” that carry little meaning on their own and can often be ignored. Removing stopwords from text can reduce noise and improve the efficiency of subsequent analyses such as topic modelling. For tasks such as sentiment analysis the stopword list needs care, because words like “not” change the meaning of a sentence.
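A minimal sketch of stopword filtering is shown below. The `STOPWORDS` set is a short hand-picked list assumed for this example; NLP libraries provide much longer, language-specific lists.

```python
import re

# A small illustrative stopword list, not a standard one.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in", "on", "it"}


def remove_stopwords(text: str, stopwords: set[str] = STOPWORDS) -> list[str]:
    """Tokenise the text and drop every token found in the stopword set."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in stopwords]


print(remove_stopwords("The service is fast and the staff are friendly"))
```

Passing the list as a parameter makes it easy to keep or drop particular words for a given task.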

TF-IDF (Term Frequency-Inverse Document Frequency)

TF-IDF (Term Frequency-Inverse Document Frequency) is a commonly used weighting scheme in NLP that quantifies how important a term is to a document within a collection of documents. It takes into account both the frequency of a term within a document (term frequency) and its rarity across the entire document collection (inverse document frequency). A term scores highly when it appears often in one document but in few of the others.
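The weighting can be sketched in a few lines. This example assumes one common variant, where term frequency is the term's share of the document's tokens and inverse document frequency is the natural log of (number of documents / number of documents containing the term). Libraries often add smoothing or normalisation on top, so their numbers will differ.

```python
import math
from collections import Counter


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Return one {term: weight} dictionary per tokenised document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "barked"],
    ["the", "cat", "purred"],
]
weights = tf_idf(docs)
print(weights[0])
```

In this toy corpus "the" appears in every document, so its weight is zero, while "sat" appears in only one document and outranks "cat", which appears in two.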

Named Entity Recognition (NER)

NER identifies and classifies named entities (e.g., names, locations, organisations) in text. It helps in extracting structured information from unstructured text and is crucial for tasks like information retrieval, question answering, and entity relationship analysis.

Keyword Extraction

NLP keyword extraction, also called keyword detection or keyword analysis, is the process of automatically identifying and extracting the most important or relevant keywords or key phrases from a given document or text. Keywords are essential terms that represent the main concepts or topics in the text and can help in understanding its content, indexing it for search, or categorising it. As such, keyword extraction is often used to summarise the main message within a certain text.
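One of the simplest baselines is to rank words by frequency after removing stopwords. The sketch below assumes that approach; the `extract_keywords` name, the stopword list, and the minimum word length are illustrative choices, and practical systems often use TF-IDF weights, graph-based ranking, or embeddings instead.

```python
import re
from collections import Counter

# Short illustrative stopword list, assumed for this example.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "of", "to", "in", "into", "on"}


def extract_keywords(text: str, top_k: int = 3) -> list[str]:
    """Return the top_k most frequent words that are not stopwords."""
    words = [
        w for w in re.findall(r"[a-z]+", text.lower())
        if w not in STOPWORDS and len(w) > 2
    ]
    return [word for word, _ in Counter(words).most_common(top_k)]


text = (
    "Solar panels convert sunlight into electricity. "
    "Solar power is clean, and solar panels are getting cheaper."
)
print(extract_keywords(text, top_k=2))
```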

Word Embeddings

Word embeddings represent words as dense vectors in a high-dimensional space, capturing their semantic relationships. Popular word embedding models like Word2Vec, GloVe, and fastText have been pre-trained on large text corpora and can be used to obtain vector representations of words. Word embeddings are valuable for tasks such as word similarity, document classification, and information retrieval.
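Word similarity is typically measured with cosine similarity between vectors. The sketch below uses made-up three-dimensional vectors purely for illustration; real pre-trained embeddings usually have hundreds of dimensions, and the values in `EMBEDDINGS` do not come from any actual model.

```python
import math

# Hypothetical toy vectors, invented for this example.
EMBEDDINGS = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
```

With these toy values, "king" is much closer to "queen" than to "apple", which mirrors the kind of relationship trained embeddings are expected to capture.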

Topic Modelling

Topic modelling is a statistical technique that uncovers latent topics or themes within a collection of documents. It helps in understanding the main subjects or discussions in a text corpus. Popular topic modelling algorithms include Latent Dirichlet Allocation (LDA) and Non-negative Matrix Factorisation (NMF).

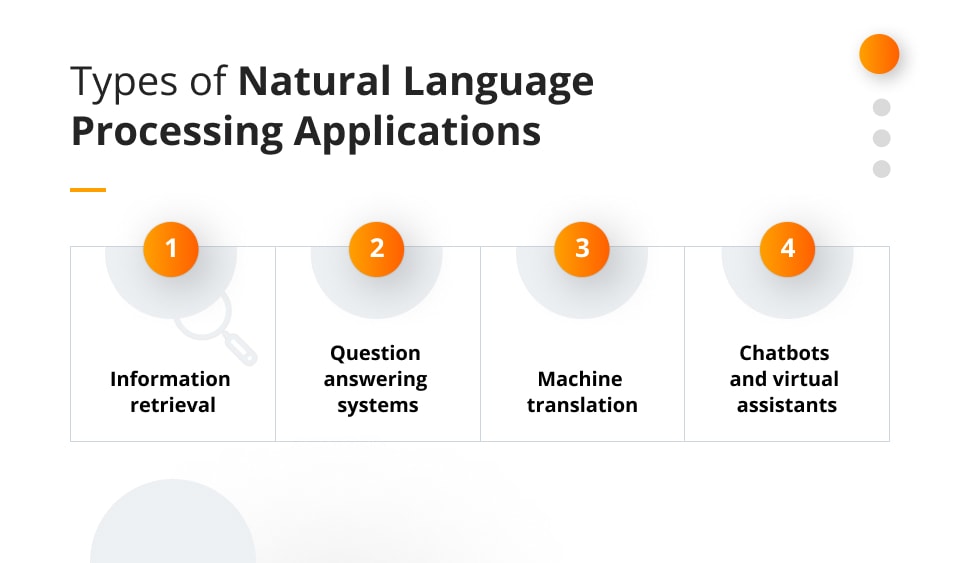

Natural Language Processing applications

Natural Language Processing (NLP) has a wide range of applications across various industries and domains. Some of the most common ones include:

Information retrieval

NLP is used to locate relevant information in unstructured text and extract it in a structured form. Named entity recognition, relationship extraction, and event extraction techniques are employed to identify and organise relevant information from documents, articles, or web pages. A good example is the financial sector, where firms need to obtain real-time information from the stock exchange quickly to stay ahead of the competition.

Question answering systems

NLP powers question answering systems that can understand natural language queries and provide relevant answers. These systems are used in virtual assistants, customer support chatbots, and search engines to deliver accurate and concise responses to user queries.

Machine translation

NLP enables automatic translation of text from one language to another. Machine translation systems like Google Translate and DeepL use NLP techniques to analyse and understand the source text and generate a translated version in the target language. Machine translation is used for translating documents, and by companies that operate in international markets and need to communicate with people who speak different languages.

Chatbots and virtual assistants

NLP is essential for building conversational agents like chatbots and virtual assistants. These systems utilise natural language understanding and generation to interact with users, answer questions, provide recommendations, or assist in various tasks. They are used in numerous sectors, from retail to finance and healthcare: chatbots at banks or medical centres quickly provide customers with relevant information, without the need to speak to a specialist.

These are just a few examples of NLP applications. The field continues to advance rapidly, with new applications emerging in areas such as document understanding, natural language generation, and conversational AI.

How are NLP techniques shaping the future of ML and AI?

NLP techniques are playing a significant role in shaping the future of machine learning (ML) and artificial intelligence (AI). They act as a bridge between humans and machines: they make interaction more natural and intuitive, which improves the user experience, and they allow machines to comprehend and interpret text in a way that was not possible before.

What’s more, NLP is facilitating communication and understanding across different languages. Machine translation models, powered by NLP techniques, are improving the accuracy and fluency of translations. Additionally, techniques like cross-lingual word embeddings and transfer learning are enabling knowledge transfer from one language to another, allowing models trained in one language to generalise to other languages with limited labelled data. These advancements are crucial for bridging language barriers and enabling global collaboration and access to information.

According to Statista, the NLP market is predicted to be roughly 14 times larger in 2025 than it was in 2017, increasing from around three billion U.S. dollars in 2017 to over 43 billion in 2025. That growth should not be underestimated if you are planning the future of your business.

To speak about possible NLP techniques and applications that can be beneficial to your organisation, do get in touch with us directly. Our dedicated ML consulting services will help you explore data-based opportunities while working with the best specialists in the field.